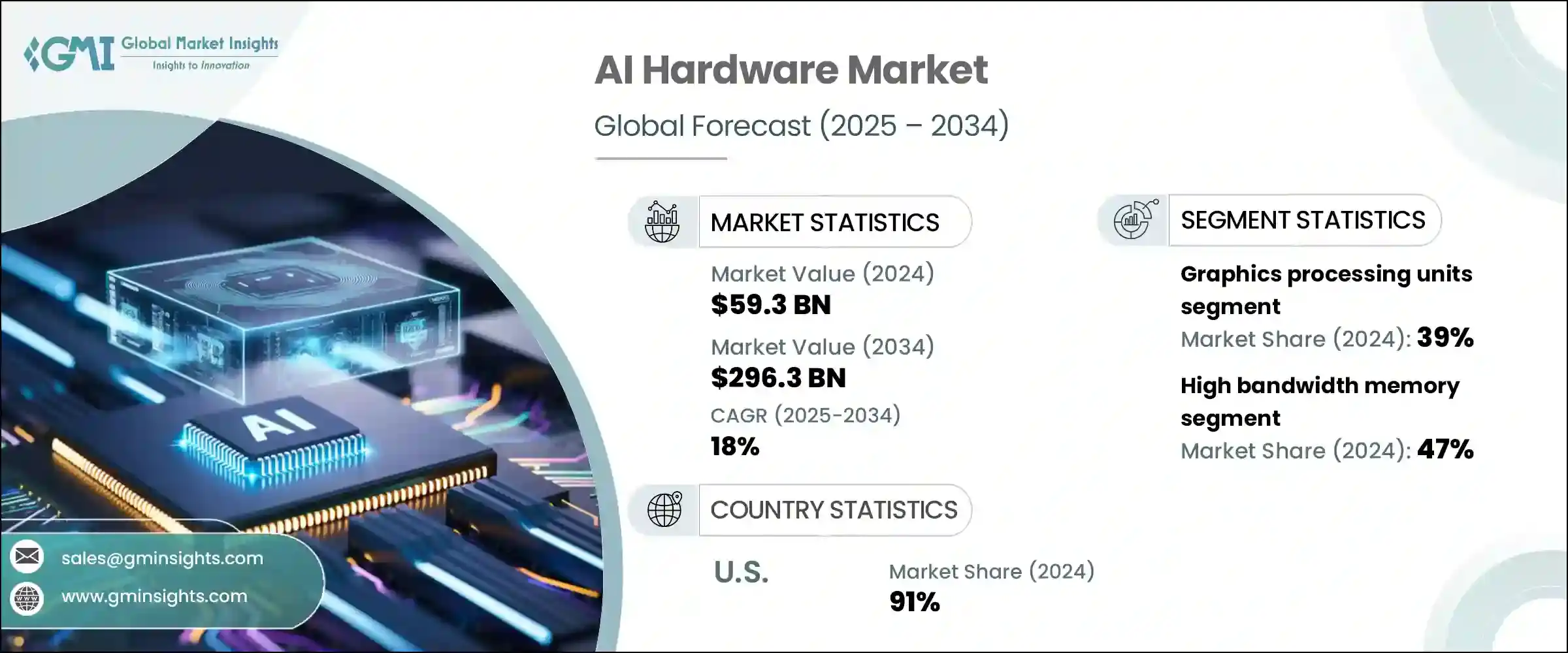

AI Hardware Demand Surges Across Global Markets


The artificial intelligence revolution, once a concept confined to research labs and science fiction, is now a tangible force reshaping every facet of our economy and daily lives. This seismic shift, supercharged by the advent of generative AI and large language models, is creating a tidal wave of demand for a critical resource: specialized AI hardware. The engines powering this new industrial era are not just lines of code, but sophisticated physical components designed to process complex mathematical computations at unimaginable speeds. This in-depth analysis explores the unprecedented surge in global AI hardware demand, delving into the key drivers, the major players and their cutting-edge technologies, the diverse ecosystem beyond chips, the formidable challenges, and the future trajectory of this foundational market.

A. The Core Catalyst: Understanding the AI Hardware Boom

To comprehend the current market frenzy, one must first understand what AI hardware is and why it is so distinct from traditional computing components. At its essence, AI hardware refers to specialized processors and systems engineered to accelerate artificial intelligence workloads, particularly those involving deep learning and neural networks.

A. The Computational Divide: CPUs vs. AI Accelerators

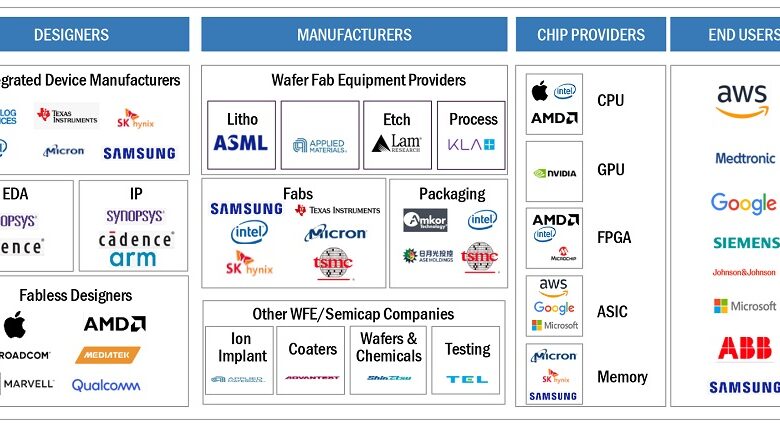

Traditional Central Processing Units (CPUs) are the generalists of the computing world. They are optimized to execute a wide range of tasks quickly, largely one instruction stream per core, and are excellent for running operating systems and everyday applications. However, the core operation of neural networks, matrix multiplication, consists of billions of independent floating-point multiply-accumulate operations, which makes it a massively parallelizable workload. AI accelerators, such as Graphics Processing Units (GPUs), Tensor Processing Units (TPUs), and other Application-Specific Integrated Circuits (ASICs), are designed with thousands of smaller, simpler cores or large arrays of arithmetic units that can perform these calculations simultaneously. This parallel architecture can make them orders of magnitude faster and more power-efficient than conventional CPUs for AI training and inference.
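To make the parallelism concrete, here is a minimal plain-Python sketch (no accelerator involved) of multiplying an m×k matrix by a k×n matrix. Each of the m·n output entries is an independent dot product of k multiply-accumulate (MAC) steps, so in principle all of them can be computed at once; that independence is what accelerators exploit. The function names are illustrative, not from any library.

```python
def matmul(A, B):
    """Naive matrix multiply: C[i][j] = sum over p of A[i][p] * B[p][j]."""
    m, k = len(A), len(A[0])
    if len(B) != k:
        raise ValueError("inner dimensions must match")
    n = len(B[0])
    # Each (i, j) entry depends only on row i of A and column j of B,
    # never on another entry of C, so all m*n entries are independent.
    return [[sum(A[i][p] * B[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]


def matmul_work(m, k, n):
    """Return (independent output entries, total multiply-accumulates)."""
    return m * n, m * n * k


# Example: multiplying two 4096 x 4096 matrices.
outputs, macs = matmul_work(4096, 4096, 4096)
print(f"{outputs:,} independent outputs, {macs:,} multiply-accumulates")
```

For the 4096×4096 example this works out to about 16.8 million independent outputs and about 68.7 billion MACs: a handful of CPU cores must work through them largely in sequence, while an accelerator spreads them across thousands of arithmetic units.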

B. The Generative AI Inflection Point

The public release of models like OpenAI’s ChatGPT served as a global demonstration of AI’s capabilities, triggering an arms race among corporations worldwide. Every tech giant, startup, and enterprise suddenly needed to build, fine-tune, or deploy large AI models. Training a single advanced generative AI model can require thousands of specialized chips running non-stop for weeks or even months, consuming vast amounts of electricity in the process. This single application has created an enormous appetite for high-performance computing power.

C. The Pervasive Expansion of AI Applications

The demand is not limited to generative AI. The proliferation of AI is now ubiquitous across industries:

- Healthcare: For medical imaging analysis, drug discovery, and personalized medicine.
- Finance: For high-frequency trading algorithms, fraud detection, and risk assessment.
- Automotive: For the development of autonomous driving systems, which rely on real-time sensor data processing.
- Retail: For recommendation engines, supply chain optimization, and inventory management.

This widespread integration means that AI computation is no longer a niche need but a core utility, much like electricity, driving sustained, long-term demand for the hardware that powers it.

B. Key Market Drivers Fueling the Unprecedented Demand

The surge in AI hardware demand is not a random event; it is the direct result of several powerful, converging macroeconomic and technological trends.

A. The Exponential Growth of Data and Model Size

We are living in the age of big data, where the total volume of digital information created is growing exponentially. Concurrently, AI models themselves are ballooning in size, evolving from millions to billions and even trillions of parameters. These “frontier models” require correspondingly massive datasets for training. The relationship is simple: training compute grows with the product of model size and dataset size, so larger models trained on more data demand a monumental increase in computational resources. This relationship, formalized in neural scaling-law research such as OpenAI’s, translates directly into a need for more powerful and more numerous AI chips.
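The scaling relationship can be made concrete with a rule of thumb widely used in the scaling-law literature: training a dense model takes roughly 6 floating-point operations per parameter per training token (about 2 for the forward pass and 4 for the backward pass). The sketch below turns that into an estimated wall-clock time. The model size, token count, chip count, per-chip peak throughput, and utilization are all hypothetical inputs chosen for illustration, not figures for any real model or chip.

```python
SECONDS_PER_DAY = 86_400


def training_flops(params, tokens):
    """Rule-of-thumb training compute: ~6 FLOPs per parameter per token."""
    return 6 * params * tokens


def training_days(params, tokens, num_chips, peak_flops_per_chip, utilization):
    """Estimated wall-clock days, assuming work divides evenly across chips.

    peak_flops_per_chip and utilization are caller-supplied assumptions;
    real utilization depends on the model, software stack, and interconnect.
    """
    sustained_flops = num_chips * peak_flops_per_chip * utilization
    return training_flops(params, tokens) / sustained_flops / SECONDS_PER_DAY


# Hypothetical run: 70B parameters, 2T tokens, 2,048 chips at an assumed
# 1e15 FLOP/s peak each, sustaining 40% of that peak.
flops = training_flops(70e9, 2e12)
days = training_days(70e9, 2e12, 2048, 1e15, 0.4)
print(f"{flops:.2e} FLOPs, about {days:.0f} days")
```

Under these assumptions the run needs about 8.4e23 FLOPs, or roughly 12 days on 2,048 chips. Because compute scales with the product of parameters and tokens, doubling both quadruples the compute, and therefore the chips or time required.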

B. The Shift from Cloud to Edge AI Computing

While massive data centers handle the training of AI models, there is a rapidly growing need to run AI inference (the process of using a trained model to make predictions) closer to where data is generated. This is known as edge computing. Applications like real-time speech translation on smartphones, quality-control cameras in manufacturing plants, and driver-assistance systems in cars cannot afford the latency of a round trip to a distant cloud server. Meeting these needs requires a new class of power-efficient, specialized AI hardware embedded directly in edge devices, creating a massive secondary market beyond the data center.
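A back-of-the-envelope calculation shows why latency matters for a car. The sketch computes how far a vehicle travels while waiting for an inference result. The 10 ms on-device and 100 ms cloud round-trip latencies are illustrative assumptions, not measurements; real figures vary widely with network conditions and model size.

```python
def distance_during_delay(speed_kmh, delay_ms):
    """Metres travelled at speed_kmh while waiting delay_ms for a result."""
    metres_per_second = speed_kmh * 1000 / 3600
    return metres_per_second * delay_ms / 1000


# Illustrative latencies only (assumptions, not benchmarks).
for label, delay_ms in [("on-device inference", 10), ("cloud round trip", 100)]:
    metres = distance_during_delay(100, delay_ms)
    print(f"{label:>20}: {metres:.2f} m travelled at 100 km/h")
```

At 100 km/h a car covers about 27.8 m per second, so an assumed 100 ms cloud round trip corresponds to roughly 2.8 m of travel before the system can react, about ten times the distance of the assumed on-device case.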

C. Intensifying Global Competition and National Security Interests

AI supremacy is now viewed as a critical component of economic competitiveness and national security. Governments worldwide, including the United States, China, and the European Union, are investing heavily in domestic AI capabilities. This has led to state-sponsored initiatives to fund AI research and development, including the design and manufacturing of sovereign AI chips. Furthermore, export controls and geopolitical tensions have spurred countries to build self-reliant semiconductor supply chains, further intensifying the race and investment in this sector.

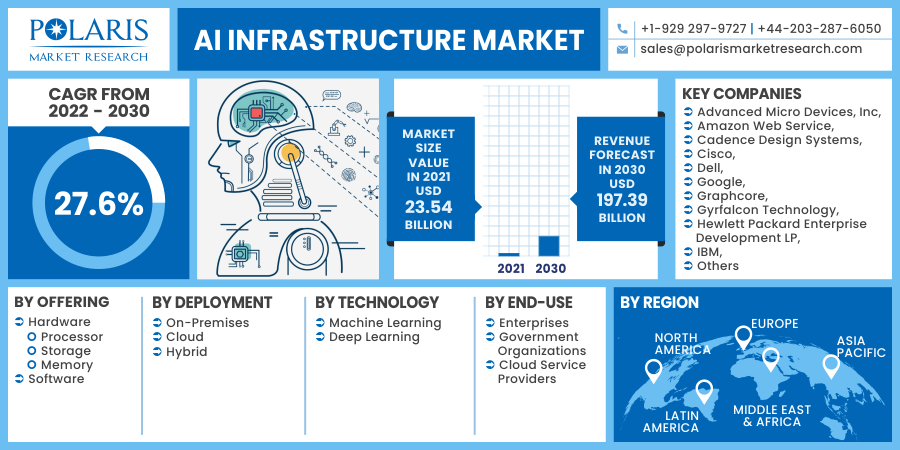

D. The Democratization of AI Development

Cloud platforms like AWS, Google Cloud, and Microsoft Azure have made powerful AI hardware accessible to virtually anyone. Through Infrastructure as a Service (IaaS) and AI-specific platform services, startups and researchers can rent time on clusters of thousands of GPUs without a massive capital outlay. This democratization has unlocked a wave of innovation from smaller players, who collectively represent a huge portion of the demand pie, consuming cloud computing resources at an accelerating rate.

C. The Architectural Evolution: A Detailed Look at AI Hardware Types

The term “AI hardware” encompasses a diverse and evolving landscape of architectures, each optimized for different tasks and performance profiles.

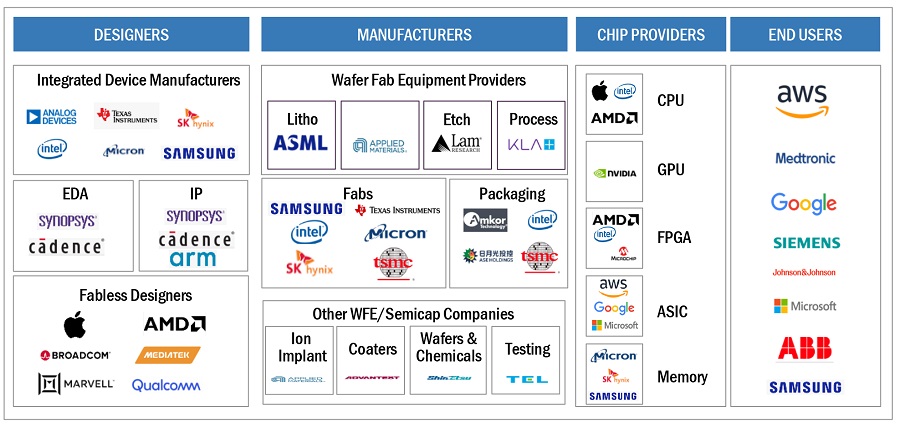

A. Graphics Processing Units (GPUs): The Workhorse of AI

Initially designed for rendering video game graphics, GPUs have become the undisputed workhorses of modern AI. Their massively parallel architecture makes them exceptionally well-suited for the matrix and vector calculations fundamental to neural networks. Nvidia, with its CUDA software ecosystem and relentless innovation in its data center GPUs (like the H100 and Blackwell platforms), has established a dominant position. Competitors like AMD are challenging with their MI300 series Instinct accelerators, but Nvidia’s first-mover advantage and robust software stack have made its GPUs the gold standard for AI training.

B. Tensor Processing Units (TPUs) and Other ASICs

Application-Specific Integrated Circuits (ASICs) are custom-designed chips built for a very specific purpose. Google’s Tensor Processing Unit (TPU) is a prime example: it is optimized for the dense tensor operations at the heart of neural networks and was originally built for TensorFlow workloads, though it now also runs JAX and PyTorch code through the XLA compiler. For their targeted workloads, ASICs can achieve performance and energy efficiency that surpass even GPUs. The trade-off is a lack of flexibility; they are less effective for tasks outside their design parameters. Developing them requires a significant investment, but it is a key strategy for hyperscalers like Google to gain a performance-per-dollar advantage at scale.
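Google has described its TPUs as built around systolic arrays: grids of multiply-accumulate units through which operands flow in lockstep, so each value fetched from memory is reused by many units. The sketch below simulates the simple output-stationary variant of a systolic array in plain Python, for illustration only. It is not Google’s design and ignores memory, numeric precision, and timing details; the function name is hypothetical.

```python
def systolic_matmul(A, B):
    """Simulate an output-stationary M x N systolic array computing A @ B.

    Processing element (PE) (i, j) keeps a running sum for C[i][j]. Row i of A
    enters from the left delayed by i cycles, column j of B enters from the
    top delayed by j cycles. Every cycle each PE multiplies the pair it holds,
    adds the product to its sum, and passes A-values right, B-values down.
    """
    M, K = len(A), len(A[0])
    if len(B) != K:
        raise ValueError("inner dimensions must match")
    N = len(B[0])
    acc = [[0] * N for _ in range(M)]     # one accumulator per PE
    a_reg = [[0] * N for _ in range(M)]   # A-values held this cycle (move right)
    b_reg = [[0] * N for _ in range(M)]   # B-values held this cycle (move down)
    cycles = M + N + K - 2                # enough for the last pair to meet

    for t in range(cycles):
        new_a = [[0] * N for _ in range(M)]
        new_b = [[0] * N for _ in range(M)]
        for i in range(M):
            for j in range(N):
                if j == 0:                # left edge: feed skewed row i of A
                    k = t - i
                    new_a[i][j] = A[i][k] if 0 <= k < K else 0
                else:                     # otherwise take left neighbour's value
                    new_a[i][j] = a_reg[i][j - 1]
                if i == 0:                # top edge: feed skewed column j of B
                    k = t - j
                    new_b[i][j] = B[k][j] if 0 <= k < K else 0
                else:                     # otherwise take upper neighbour's value
                    new_b[i][j] = b_reg[i - 1][j]
        a_reg, b_reg = new_a, new_b
        # In hardware all PEs fire in parallel: one multiply-accumulate each.
        for i in range(M):
            for j in range(N):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc, cycles


C, cycles = systolic_matmul([[1, 2, 3], [4, 5, 6]], [[7, 8], [9, 10], [11, 12]])
print(C, cycles)  # [[58, 64], [139, 154]] 5
```

The skewed feeding ensures that A[i][k] and B[k][j] meet in PE (i, j) on the same cycle. The whole product finishes in M + N + K − 2 cycles instead of the M·N·K sequential steps a single arithmetic unit would need.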

C. Field-Programmable Gate Arrays (FPGAs)

FPGAs are integrated circuits that can be configured and reconfigured by a customer or designer after manufacturing. They offer a middle ground between the flexibility of a CPU and the performance of an ASIC. They are highly efficient for specific, fixed algorithms and are widely used in networking and embedded vision systems where low latency is critical. Companies like Intel, with its Agilex and Stratix families, and AMD, following its acquisition of Xilinx, have a strong presence in this segment.
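The “reconfigured after manufacturing” idea rests largely on lookup tables (LUTs). An FPGA fabric combines many small LUTs with flip-flops, DSP blocks, and programmable routing, and loading a new bitstream rewrites the LUT contents and the connections. The sketch below models a single k-input LUT in Python to show how the same hardware element becomes a different logic gate when only its configuration bits change. The class is a hypothetical illustration, not a vendor tool or API.

```python
class LUT:
    """A k-input lookup table, the basic configurable logic element of an FPGA.

    Its configuration is a truth table of 2**k output bits; loading a new
    table changes the logic function without changing the hardware.
    """

    def __init__(self, num_inputs):
        self.num_inputs = num_inputs
        self.table = [0] * (2 ** num_inputs)

    def configure(self, func):
        """Fill the truth table by evaluating func on every input combination."""
        for index in range(2 ** self.num_inputs):
            bits = [(index >> b) & 1 for b in range(self.num_inputs)]
            self.table[index] = int(bool(func(*bits)))

    def __call__(self, *bits):
        if len(bits) != self.num_inputs:
            raise ValueError("wrong number of inputs")
        index = sum(bit << b for b, bit in enumerate(bits))
        return self.table[index]


lut = LUT(2)
lut.configure(lambda a, b: a & b)   # behaves as an AND gate
print([lut(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 0, 0, 1]
lut.configure(lambda a, b: a ^ b)   # "reprogrammed" as an XOR gate
print([lut(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 1, 1, 0]
```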

D. Neuromorphic Chips and Quantum Computing (The Future Frontier)

Looking further ahead, researchers are exploring radically different architectures. Neuromorphic chips are designed to mimic the structure and function of the human brain, using networks of “spiking neurons” to process information with extreme energy efficiency. While still largely in the research phase, they hold promise for a new paradigm in AI computation. Similarly, quantum computing, though in its infancy, has the potential to solve certain classes of optimization and machine learning problems that are intractable for even the most powerful classical computers today.
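A common building block in neuromorphic research is the leaky integrate-and-fire (LIF) neuron. The sketch below is a textbook discrete-time version with illustrative parameters (a leak factor of 0.9 and a threshold of 1.0), not the neuron model of any particular chip. The neuron produces output, a spike, only when accumulated input crosses a threshold. This is why event-driven hardware can stay largely idle, and save energy, when nothing is happening.

```python
def lif_neuron(inputs, leak=0.9, threshold=1.0):
    """Simulate a leaky integrate-and-fire neuron over discrete time steps.

    Returns the list of time steps at which the neuron spiked.
    """
    potential = 0.0
    spikes = []
    for t, current in enumerate(inputs):
        potential = leak * potential + current  # leak a little, then integrate
        if potential >= threshold:              # fire when threshold is crossed
            spikes.append(t)
            potential = 0.0                     # reset after the spike
    return spikes


print(lif_neuron([0.3] * 8))   # [3, 7]
print(lif_neuron([0.0] * 8))   # []  no input, no spikes
```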

D. The Competitive Landscape: Titans and Challengers in the AI Chip Arena

The race for AI hardware dominance is being fought by a mix of established giants, ambitious challengers, and vertical integrators.

A. Nvidia Corporation: The Reigning Champion

Nvidia’s foresight in launching the CUDA parallel computing platform more than fifteen years ago has paid off spectacularly. Its combination of state-of-the-art silicon (GPUs) and a comprehensive, easy-to-use software ecosystem has created a powerful virtuous cycle: developers build on CUDA, which ties them to Nvidia hardware, and the resulting hardware sales fund more R&D. Its data center GPUs are the most sought-after components in the market, and the company has effectively expanded its identity from a graphics company to the foundational platform for the AI industry.

B. Advanced Micro Devices (AMD): The Formidable Contender

Under the leadership of Dr. Lisa Su, AMD has staged a remarkable comeback and is now Nvidia’s most direct competitor. Its Instinct series of accelerators, particularly the MI300, offers compelling performance. AMD’s strategy involves building an open software ecosystem, ROCm, to challenge Nvidia’s CUDA dominance. Its acquisition of Xilinx has also bolstered its capabilities in the FPGA space, making it a full-stack provider of compute solutions.

C. The Hyperscalers: Designing Their Own Destiny

Not content to rely solely on merchant vendors, tech giants like Google, Amazon, and Microsoft are designing their own custom AI chips.

- Google: Has multiple generations of TPUs powering its internal services and cloud offerings.
- Amazon (AWS): Developed the Inferentia chip for high-performance, low-cost inference and the Trainium chip for training.
- Microsoft: Has unveiled its first custom AI accelerator, Maia, and a CPU named Cobalt, signaling its deep vertical integration ambitions.

This trend toward custom silicon allows these companies to tailor their hardware closely to their specific software workloads and reduce their dependence on external suppliers.

D. The Specialized Startups and Legacy Players

A vibrant ecosystem of startups, such as Cerebras Systems with its record-breaking Wafer-Scale Engine and SambaNova Systems, is pushing architectural boundaries. Meanwhile, legacy semiconductor leader Intel is fighting to regain relevance with its Gaudi accelerators and by leveraging its formidable manufacturing and packaging technologies.

E. The Ripple Effect: Challenges and Implications of the Hardware Crunch

The soaring demand for AI hardware is creating significant ripple effects across the global economy and presenting formidable challenges.

A. Severe Supply Chain Constraints and Manufacturing Bottlenecks

The advanced fabrication of these chips is one of the most complex manufacturing processes on Earth. It relies on companies like TSMC and Samsung Foundry, which operate at near-full capacity. The lead time for acquiring high-end AI chips can extend to many months, creating a significant bottleneck for AI development and deployment. Building new semiconductor fabs is a multi-billion-dollar, multi-year endeavor, meaning supply constraints are likely to persist.

B. Soaring Costs and Intensifying Capital Expenditure

The high demand and limited supply have driven up the cost of AI hardware. A single server rack equipped with the latest GPUs can cost millions of dollars. This is forcing companies to make difficult budgetary decisions and is concentrating immense AI power in the hands of a few well-capitalized tech behemoths, potentially stifling competition from smaller entities.

C. The Critical Software and Ecosystem Lock-In

Hardware is only half the battle; much of the value lies in the full-stack solution. Nvidia’s dominance is as much about its CUDA software as it is about its silicon. This creates a significant ecosystem lock-in, where the cost of switching to a competitor’s hardware (which may require porting code, rewriting custom kernels, and re-tuning models) is prohibitively high. Breaking this lock-in is the primary challenge facing Nvidia’s rivals.

D. The Unsustainable Energy Consumption and Environmental Impact

AI data centers are incredibly power-hungry. Training a single large AI model can consume more electricity than a hundred homes use in a year. As AI scales, its environmental footprint becomes a critical concern. The next frontier of innovation in AI hardware will not just be about raw performance, but also about performance per watt—achieving more computation with less energy. This is driving research into more efficient chip designs, advanced cooling technologies, and the use of renewable energy sources for data centers.
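Energy comparisons like the one above come from simple arithmetic: accelerator count × power per accelerator × hours, scaled up by the facility’s power usage effectiveness (PUE, the ratio of total facility energy to IT equipment energy). The sketch below is a rough estimator under stated assumptions. It counts only accelerator power (ignoring CPUs, networking, and storage), and every input, including the per-household annual consumption, is a hypothetical placeholder rather than a measurement of any real training run.

```python
def training_energy_mwh(num_accelerators, watts_per_accelerator, hours, pue):
    """Estimated facility energy for a training run, in megawatt-hours.

    pue scales accelerator power up to whole-facility power, covering cooling
    and power-delivery overhead; a PUE of 1.0 would mean no overhead at all.
    """
    watt_hours = num_accelerators * watts_per_accelerator * hours * pue
    return watt_hours / 1e6


def household_years(energy_mwh, household_kwh_per_year):
    """Number of households whose annual electricity use equals energy_mwh."""
    return energy_mwh * 1000 / household_kwh_per_year


# All inputs are hypothetical placeholders, not data from a real run.
energy = training_energy_mwh(num_accelerators=2000, watts_per_accelerator=700,
                             hours=30 * 24, pue=1.2)
print(f"{energy:.0f} MWh, about {household_years(energy, 10_000):.0f} household-years")
```

With these placeholder inputs the estimate comes to roughly 1,210 MWh. Halving the power drawn per accelerator at the same throughput would halve that figure, which is why performance per watt has become a headline metric.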

F. The Future Trajectory: Where is AI Hardware Headed?

The evolution of AI hardware is accelerating, promising a future of even greater specialization and integration.

A. The Rise of Heterogeneous Computing and Chiplets

The future of high-performance computing lies in heterogeneity—integrating different types of cores (CPU, GPU, ASIC) on a single package or within a tightly coupled system. The “chiplet” model, where smaller, specialized dies are interconnected on a single package, is gaining traction. This allows manufacturers to mix and match components for optimal performance and yield, reducing costs and accelerating development cycles.
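The yield benefit of chiplets can be illustrated with the classic Poisson yield model, in which the fraction of defect-free dies is e^(−D·A) for defect density D and die area A. The sketch compares one large die with the same logic split into four chiplets. The area and defect density are hypothetical, and the model ignores packaging yield and the extra area spent on die-to-die interfaces.

```python
import math


def die_yield(area_cm2, defects_per_cm2):
    """Fraction of dies with zero defects under a simple Poisson yield model."""
    return math.exp(-defects_per_cm2 * area_cm2)


# Hypothetical inputs: 8 cm^2 of logic, 0.1 defects per cm^2.
TOTAL_AREA, DEFECT_DENSITY = 8.0, 0.1
monolithic = die_yield(TOTAL_AREA, DEFECT_DENSITY)
chiplet = die_yield(TOTAL_AREA / 4, DEFECT_DENSITY)  # same logic split 4 ways

# Defective chiplets can be tested and discarded individually before
# packaging, so the share of fabricated silicon that ends up in products
# roughly tracks the per-chiplet yield rather than the monolithic one.
print(f"monolithic die yield: {monolithic:.1%}")   # 44.9%
print(f"per-chiplet yield:    {chiplet:.1%}")      # 81.9%
```

Because a single defect scraps only a small chiplet rather than a whole large die, splitting the design raises usable output under these assumptions. That is the yield advantage the chiplet model trades against added packaging complexity.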

B. The Proliferation of Domain-Specific Architectures (DSAs)

As the field matures, we will see a move away from general-purpose AI accelerators toward architectures designed for very specific domains, such as AI for drug discovery, robotics, or specific types of generative models. These DSAs will offer unmatched efficiency for their target applications.

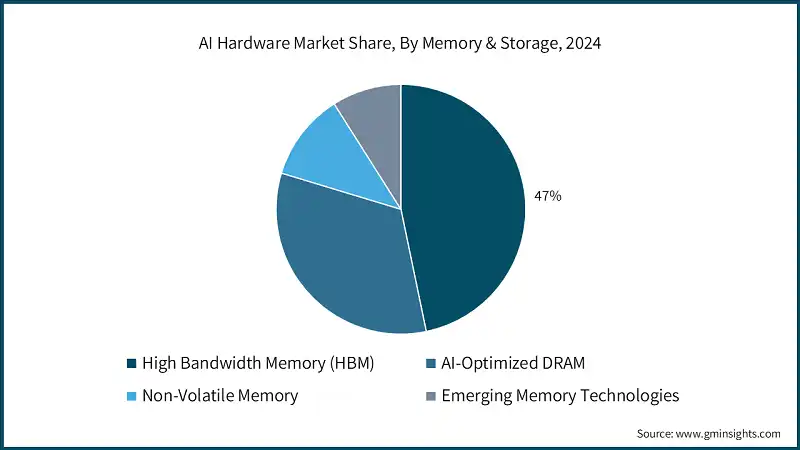

C. The Integration of Photonics and Advanced Packaging

To overcome the physical limitations of electrical signaling, researchers are exploring silicon photonics—using light instead of electrons to transmit data within and between chips. This could dramatically reduce power consumption and increase bandwidth. Furthermore, advanced 3D packaging technologies will allow for denser, faster, and more efficient integration of compute and memory.

D. The Focus on the Full-Stack AI System

The competition will increasingly shift from individual chips to full-stack solutions. Winners will be those who can best co-design their hardware and software, optimize the entire data center for AI workloads (including networking and storage), and provide developers with the most seamless and powerful platform to build upon.

Conclusion: Building the Foundations of an Intelligent Future

The soaring demand for AI hardware is a clear indicator that the age of artificial intelligence is not a distant promise but a present reality. The specialized chips, systems, and data centers being built today are the fundamental infrastructure of our future economy, akin to the railroads and power grids of centuries past. While the market faces significant challenges related to supply, cost, and sustainability, the relentless pace of innovation promises a new generation of hardware that is more powerful, efficient, and accessible. For businesses, developers, and policymakers, understanding this dynamic landscape is no longer optional; it is essential for navigating and thriving in the intelligent world that is being built, one transistor at a time.