Building Scalable AI Infrastructure Systems

A. The Foundation of Intelligent Systems: Why AI Infrastructure Matters

Artificial Intelligence is no longer a future promise but a present-day competitive imperative across many industries. However, the journey from a conceptual AI model to a production-grade intelligent system involves significant technical challenges, and one of the greatest is building the right underlying infrastructure. AI infrastructure encompasses the entire ecosystem of hardware and software required to develop, train, deploy, and maintain machine learning models effectively at scale. It is the unglamorous, yet critically important, engine room of any successful AI initiative. A robust AI infrastructure is not merely about raw computing power; it is a holistic architecture designed for the data-intensive and computationally intensive demands of machine learning workloads. This guide dissects the core components of a modern AI infrastructure and provides a blueprint for organizations to build a scalable, efficient foundation for their intelligent applications.

The consequences of neglecting infrastructure are severe: projects stalled by endless training times, models that cannot handle real-world data loads, exorbitant cloud bills, and the inability to iterate quickly. Conversely, a well-architected AI infrastructure accelerates experimentation, reduces time-to-market for new capabilities, controls costs, and ensures that AI models perform reliably in production. We will explore the critical requirements across compute, storage, networking, and software, offering strategic insights for both on-premises and cloud-based deployments.

B. Deconstructing the AI Workload Pipeline

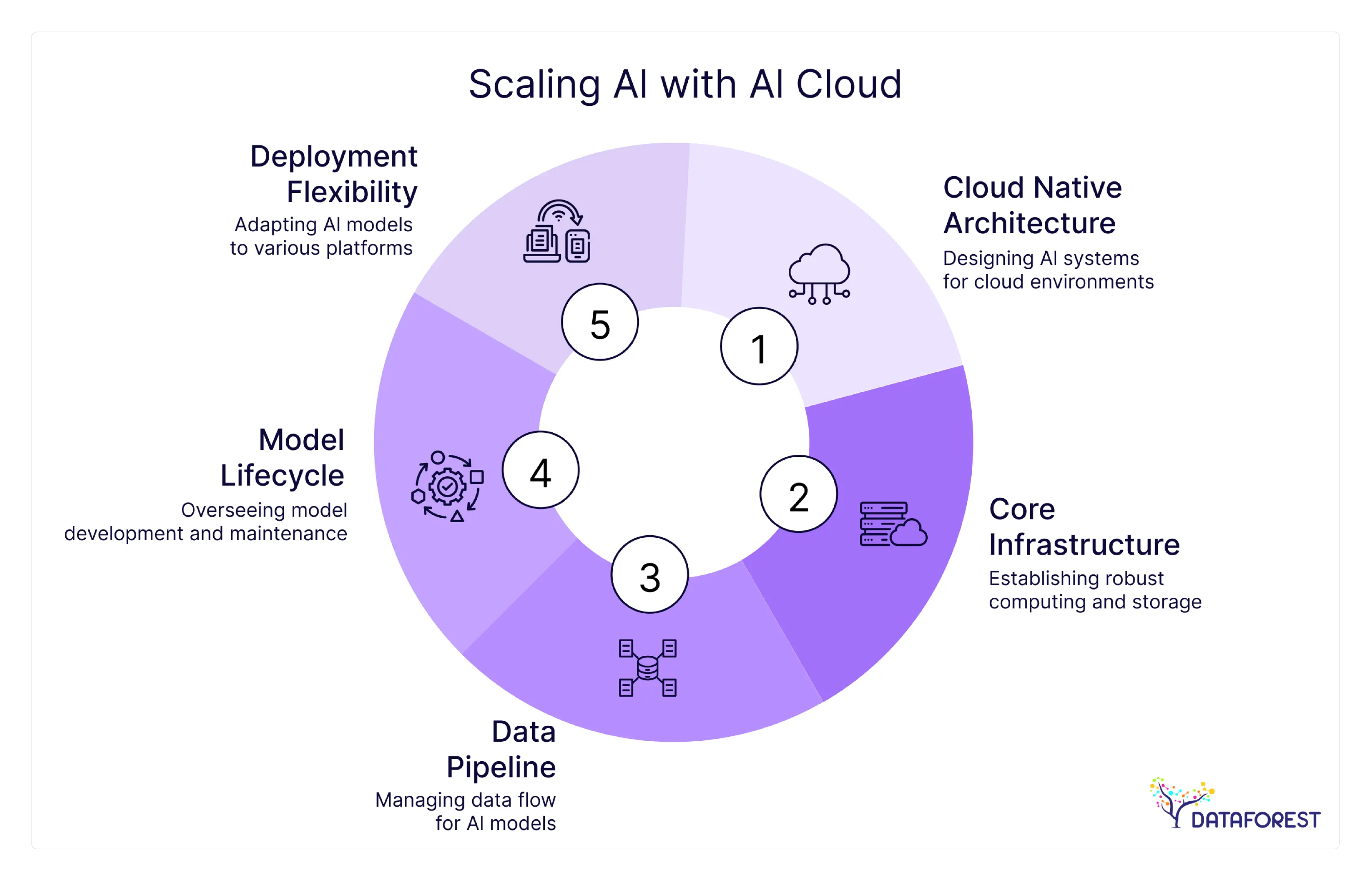

To understand infrastructure needs, one must first understand the distinct phases of the AI lifecycle, each with its own unique demands on the system.

A. The Data Preparation and Ingestion Phase

Before any model can be trained, data must be collected, stored, and prepared. This phase is often the most underestimated in terms of infrastructure complexity.

- Characteristics: High I/O operations, significant storage capacity needs, and data transformation processing.
- Key Activities: Data labeling, cleaning, augmentation, and feature engineering.

B. The Model Training and Experimentation Phase

This is the most computationally intensive phase, where the model learns patterns from the prepared data.

- Characteristics: Extremely high parallel computing demands, massive memory bandwidth requirements, and sustained workloads that can run for days or even weeks.
- Key Activities: Running gradient descent algorithms, adjusting millions or billions of parameters, and hyperparameter tuning.
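
To make the gradient descent activity above concrete, here is a minimal sketch in plain Python that fits a line to a handful of points. The function name `fit_line`, the learning rate, and the step count are illustrative assumptions; real training runs rely on frameworks and accelerators, but the parameter update has the same shape.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Move each parameter a small step against its gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


if __name__ == "__main__":
    # Points generated from y = 2x + 1, so the fit should approach w=2, b=1.
    print(fit_line([0, 1, 2, 3, 4], [1, 3, 5, 7, 9]))
```

A deep learning model repeats this same loop over millions or billions of parameters, which is why the phase is dominated by parallel arithmetic and memory bandwidth.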

C. The Model Inference and Serving Phase

Once trained, the model is deployed to make predictions on new, unseen data.

- Characteristics: Requires low-latency response times, high throughput for concurrent requests, and high availability. The compute requirements per prediction are lower than training, but the scale can be enormous.
- Key Activities: Processing API requests, generating predictions (e.g., classifying an image, generating text), and returning results.
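
The interplay of latency, throughput, and scale described above can be estimated with Little's law (requests in flight = arrival rate times latency). The following is a rough sizing sketch; the function name and the example traffic figures are hypothetical, and it ignores queueing, batching, and traffic bursts.

```python
import math


def replicas_needed(requests_per_second, latency_ms, concurrency_per_replica):
    """Estimate serving replicas from Little's law: in-flight = rate * latency."""
    in_flight = requests_per_second * latency_ms / 1000
    return math.ceil(in_flight / concurrency_per_replica)


if __name__ == "__main__":
    # Hypothetical load: 2,000 requests/s at 50 ms each, 8 concurrent
    # requests per replica.
    print(replicas_needed(2000, 50, 8))
```

In practice one would add headroom on top of this estimate to absorb spikes and to keep availability during rolling updates.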

C. Core Hardware Components: Building the AI Engine

The choice of hardware is the most consequential decision in building AI infrastructure, directly impacting performance, cost, and scalability.

A. Compute: The Heart of AI Processing

CPUs alone are no longer sufficient for deep learning at scale. Specialized accelerators are essential for training and for high-volume inference.

- Graphics Processing Units (GPUs):
  - Why GPUs? Their massively parallel architecture, with thousands of smaller cores, is perfectly suited for the matrix and vector operations that underpin neural network training and inference.
  - Key Players:
    - NVIDIA: The undisputed market leader with its CUDA software ecosystem and powerful GPUs like the H100 and A100 for data centers, and the RTX 4090 for workstations.
    - AMD: A strong competitor with its Instinct series (e.g., MI300X) and open ROCm software platform.
  - Considerations: VRAM capacity is critical. Larger models require more VRAM to fit during training. For example, training a large language model may require multiple GPUs with 80 GB of VRAM each.
- Tensor Processing Units (TPUs) and Other ASICs:
  - These are application-specific integrated circuits custom-built by companies like Google and Amazon for accelerating machine learning workloads. They can offer superior performance and efficiency for specific model types but are less flexible than general-purpose GPUs.
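
The VRAM consideration above can be turned into a back-of-the-envelope estimate. This sketch assumes mixed-precision training with the Adam optimizer, a commonly cited budget of about 16 bytes per parameter (2 for fp16 weights, 2 for fp16 gradients, and 12 for fp32 master weights plus two Adam moments). It ignores activations and assumes the state can be sharded evenly across GPUs; the function names and the 90% usable fraction are illustrative assumptions.

```python
import math

# Assumed per-parameter cost: fp16 weights + fp16 gradients
# + fp32 master weights + Adam first moment + Adam second moment.
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4


def training_state_gb(num_params, bytes_per_param=BYTES_PER_PARAM):
    """Model and optimizer state in GB (10^9 bytes), excluding activations."""
    return num_params * bytes_per_param / 1e9


def min_gpus(num_params, vram_gb=80, usable_fraction=0.9):
    """Fewest GPUs whose combined usable VRAM holds the training state."""
    usable_gb = vram_gb * usable_fraction
    return math.ceil(training_state_gb(num_params) / usable_gb)


if __name__ == "__main__":
    params = 7_000_000_000  # a hypothetical 7-billion-parameter model
    print(training_state_gb(params), min_gpus(params))
```

Activation memory, which grows with batch size and sequence length, often pushes the real requirement well above this floor.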

B. Storage: Fueling the AI Data Pipeline

AI models are voracious consumers of data. Slow storage can create a critical bottleneck, leaving expensive GPUs idle.

- Performance Requirements: AI workloads require high-throughput and low-latency storage.
- Recommended Solutions:
  - All-Flash NVMe Arrays: NVMe SSDs connected via PCIe offer the highest I/O performance for training on large, hot datasets.
  - Distributed File Systems and Object Storage: Solutions like WEKA, Lustre, and cloud-based object stores (AWS S3, Google Cloud Storage) are ideal for managing petabytes of training data. They provide scalability and parallel access.
- Storage Tiering: A common strategy is to use a high-performance NVMe tier for active training datasets and a larger, cheaper object storage tier for archiving cold data and model checkpoints.
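
To check whether storage will leave GPUs idle, it can help to estimate the aggregate read throughput a training job demands. The sketch below multiplies GPU count, per-GPU sample rate, and average sample size; the function name and all example figures are hypothetical, and caching or on-the-fly decompression would change the result.

```python
def required_read_gb_per_s(num_gpus, samples_per_sec_per_gpu, bytes_per_sample):
    """Aggregate read throughput in GB/s (10^9 bytes) needed to keep GPUs fed."""
    return num_gpus * samples_per_sec_per_gpu * bytes_per_sample / 1e9


if __name__ == "__main__":
    # Hypothetical image job: 8 GPUs, 2,500 images/s each, ~110 KB per image.
    print(required_read_gb_per_s(8, 2500, 110_000))
```

If the storage tier cannot sustain the resulting figure, the GPUs will spend part of each step waiting on data.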

C. Networking: The Circulatory System of AI Clusters

For multi-node training, the network connecting servers often becomes the limiting factor for performance.

- The Bottleneck Problem: During distributed training, GPUs constantly synchronize gradients and parameters across nodes. A slow network leaves GPUs waiting on communication and can severely slow down the entire process.
- High-Speed Interconnects:
  - InfiniBand: The gold standard for AI clusters, offering extremely low latency and high bandwidth (e.g., 400 Gb/s).
  - NVLink and NVSwitch: NVIDIA’s separate GPU interconnect, which accelerates GPU-to-GPU communication within a server and, in some systems, between servers.
  - High-Speed Ethernet: RoCE (RDMA over Converged Ethernet) can be a cost-effective alternative, providing some of the benefits of InfiniBand on Ethernet networks.
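
The cost of gradient synchronization can be approximated with the standard ring all-reduce traffic model, in which each GPU sends and receives roughly 2(N-1)/N times the gradient size. The sketch below is a bandwidth-only lower bound under that assumption; the function name and example figures are hypothetical, and it ignores latency, compute/communication overlap, and hierarchical topologies.

```python
def ring_allreduce_seconds(gradient_bytes, num_gpus, link_gbit_per_s):
    """Bandwidth-only lower bound for one ring all-reduce of the gradients."""
    bytes_per_second = link_gbit_per_s * 1e9 / 8
    # Each GPU transfers about 2 * (N - 1) / N times the gradient size.
    traffic_bytes = 2 * (num_gpus - 1) / num_gpus * gradient_bytes
    return traffic_bytes / bytes_per_second


if __name__ == "__main__":
    grads = 2_000_000_000  # 1 billion fp16 gradients = 2 GB
    for gbit in (100, 400):
        print(gbit, ring_allreduce_seconds(grads, 8, gbit))
```

Comparing the result against the compute time of one training step gives a quick sense of whether the network or the GPUs will dominate.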

D. Software and Platform Ecosystem: Orchestrating Intelligence

The hardware is useless without a sophisticated software stack to manage, orchestrate, and simplify the AI development process.

A. The MLOps Platform: The Operating System for AI

MLOps (Machine Learning Operations) is a practice that aims to unify ML system development and ML system operation.

- Version Control for Data and Models: Tools like DVC (Data Version Control) and Pachyderm manage datasets and model versions alongside code.
- Experiment Tracking: Platforms like MLflow, Weights & Biases, and Comet.ml log experiments, parameters, metrics, and outputs, enabling reproducibility and collaboration.
- Model Registry: A centralized repository to store, version, and manage model lineages from staging to production.
- Automated Pipelines: Orchestration tools like Kubeflow Pipelines and Airflow automate the end-to-end ML lifecycle, from data ingestion to model deployment.
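
The model registry idea above can be illustrated with a toy in-memory version. This is a sketch of the concept only, not the API of MLflow or any other tool; the class names, the stage labels, and the example model name are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModelVersion:
    name: str
    version: int
    params: dict
    metrics: dict
    stage: str = "staging"


class ModelRegistry:
    """Toy registry: versions are numbered per model name, starting at 1."""

    def __init__(self):
        self._versions = {}

    def register(self, name, params, metrics):
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(name, len(versions) + 1, dict(params), dict(metrics))
        versions.append(entry)
        return entry

    def promote(self, name, version):
        versions = self._versions[name]
        target = versions[version - 1]
        # Only one version per model may be in production at a time.
        for entry in versions:
            if entry.stage == "production":
                entry.stage = "archived"
        target.stage = "production"
        return target

    def production(self, name):
        for entry in self._versions.get(name, []):
            if entry.stage == "production":
                return entry
        return None


if __name__ == "__main__":
    registry = ModelRegistry()
    registry.register("churn", {"lr": 0.1}, {"auc": 0.81})
    registry.register("churn", {"lr": 0.05}, {"auc": 0.84})
    registry.promote("churn", 2)
    print(registry.production("churn"))
```

A production registry would persist this state and store the model artifacts themselves, but the staging-to-production lineage follows the same pattern.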

B. Containerization and Orchestration

Modern AI infrastructure is built on containers and Kubernetes.

- Docker: Packages code, libraries, and dependencies into portable containers, ensuring consistency from a developer’s laptop to a training cluster.
- Kubernetes: The industry-standard system for automating deployment, scaling, and management of containerized applications. It is ideal for managing diverse AI workloads, from Jupyter notebooks for experimentation to scalable inference endpoints.
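
As an illustration of how a containerized training job asks Kubernetes for a GPU, the sketch below builds a Pod manifest as a Python dictionary and prints it as JSON. It assumes a cluster where the NVIDIA device plugin exposes the `nvidia.com/gpu` resource; the pod name, the image name, and `train.py` are hypothetical placeholders.

```python
import json


def gpu_pod_manifest(name, image, gpus=1):
    """Build a minimal Pod manifest that requests `gpus` NVIDIA GPUs."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,  # hypothetical training image
                    "command": ["python", "train.py"],
                    # GPUs are requested as an extended resource under limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest("train-demo", "registry.example.com/trainer:latest")
    print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, since Kubernetes accepts manifests in JSON as well as YAML.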

C. AI Frameworks and Libraries

These are the foundational tools used by data scientists to build models.

- PyTorch and TensorFlow: The two dominant open-source frameworks for deep learning. PyTorch is favored for its flexibility and research-friendliness, while TensorFlow has a long-established production deployment ecosystem.
- Hugging Face Transformers: An essential library providing thousands of pre-trained models for Natural Language Processing (NLP), drastically reducing development time.

E. Architectural Deployment Models: On-Premises vs. Cloud

Organizations must choose where to host their AI infrastructure, a decision with major strategic implications.

A. Cloud AI Services (AWS, Google Cloud, Azure)

- Pros:
  - Instant Access and Scalability: No upfront capital expenditure; scale GPU resources up or down in minutes.
  - Managed Services: Access to pre-configured AI services (e.g., SageMaker, Vertex AI, Azure ML) that abstract away infrastructure management.
  - Latest Hardware: Continuous access to the newest generations of GPUs and TPUs.
- Cons:
  - Long-Term Cost: Can become very expensive for sustained, high-volume workloads.
  - Data Egress Fees: Costly to move large datasets out of the cloud.
  - Potential Vendor Lock-in: Deep integration with a cloud provider’s proprietary services.

B. On-Premises / Private AI Cloud

- Pros:
  - Data Control and Security: Ideal for organizations with sensitive data bound by strict compliance or sovereignty requirements.
  - Predictable Costs: High upfront CapEx but lower long-term TCO for predictable, continuous workloads.
  - Performance: Can optimize the entire stack (compute, storage, networking) for lowest latency and highest throughput.
- Cons:
  - High Initial Investment: Requires significant capital to purchase and set up hardware.
  - Maintenance Overhead: Requires an in-house team to manage and maintain the infrastructure.
  - Hardware Refresh Cycles: Risk of technological obsolescence.

C. The Hybrid Approach

Many enterprises adopt a hybrid model, using the cloud for experimentation and burst training capacity while running core inference workloads and housing sensitive data on-premises.
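
The cost trade-off between cloud and on-premises deployment can be framed as a simple break-even calculation. The sketch below is a deliberately simplified model; the function names and every dollar figure are hypothetical, and it leaves out depreciation, staffing, egress fees, and discounts such as reserved or spot pricing.

```python
def cloud_monthly_cost(num_gpus, hourly_rate_per_gpu, hours_per_month):
    """Monthly cloud spend for a fixed number of GPU-hours."""
    return num_gpus * hourly_rate_per_gpu * hours_per_month


def break_even_months(capex, onprem_monthly_opex, cloud_monthly):
    """Months until on-prem CapEx is repaid by savings, or None if never."""
    monthly_savings = cloud_monthly - onprem_monthly_opex
    if monthly_savings <= 0:
        return None
    return capex / monthly_savings


if __name__ == "__main__":
    # Hypothetical: 8 GPUs at $3/hour running 720 hours a month in the cloud,
    # versus a $300,000 server with $2,280/month in power and hosting.
    cloud = cloud_monthly_cost(8, 3.0, 720)
    print(cloud, break_even_months(300_000, 2_280, cloud))
```

Lower utilization shrinks the cloud bill and lengthens the break-even period, which is one reason bursty experimentation tends to stay in the cloud while steady workloads move on-premises.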

F. A Strategic Implementation Roadmap

Building AI infrastructure is a journey. Follow this phased approach.

A. Phase 1: Foundation and Experimentation (Months 1-3)

- Start in the Cloud: Begin with cloud GPU instances (e.g., NVIDIA A100 on AWS) and managed notebooks.
- Establish MLOps Basics: Implement experiment tracking and model registry.
- Focus: Enable a small team of data scientists to prototype and validate AI use cases.

B. Phase 2: Scaling and Production (Months 4-12)

- Build Orchestrated Pipelines: Implement Kubeflow or Airflow for automated training and deployment.
- Evaluate Hybrid Needs: If data gravity or cost becomes an issue, begin planning an on-premises GPU cluster.
- Focus: Transition successful prototypes into reliable, monitored production services.

C. Phase 3: Optimization and Enterprise Scale (Year 2+)

- Deploy Dedicated AI Cluster: For core workloads, invest in an on-premises or co-located cluster with InfiniBand and high-performance storage.
- Implement Advanced MLOps: Incorporate automated retraining, drift detection, and full lifecycle management.
- Focus: Achieve maximum performance, efficiency, and governance across the entire AI portfolio.
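
Drift detection, mentioned above, can be sketched with the Population Stability Index (PSI), one of several common drift metrics. The code below is a minimal stdlib-only version; the function name, the equal-width binning, the epsilon floor for empty bins, and the example data are assumptions, and the often-quoted threshold of about 0.25 for significant drift is a rule of thumb rather than a standard.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a newer sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def bin_fractions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range go to the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at a small epsilon so the logarithm is defined.
        return [max(c / len(values), 1e-6) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]
    shifted = [x + 0.3 for x in baseline]
    print(population_stability_index(baseline, baseline))
    print(population_stability_index(baseline, shifted))
```

A pipeline could compute this per feature on a schedule and trigger retraining when the score stays above a chosen threshold.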

G. Conclusion: Building the Intelligent Core of Your Organization

AI infrastructure is the critical, enabling substrate that separates theoretical AI potential from tangible business value. It is a complex, multi-faceted investment that requires careful planning across compute, storage, networking, and software. There is no one-size-fits-all solution; the optimal architecture depends entirely on an organization’s specific use cases, data strategy, and financial model.

The journey begins not with a massive hardware purchase, but with a clear strategy. Start by understanding your AI workload pipeline, empower your teams with modern MLOps practices in the cloud, and scale deliberately towards a hybrid or on-premises environment as your needs mature. By viewing AI infrastructure as a strategic asset and building it with scalability, efficiency, and reproducibility in mind, you lay the groundwork for a future where intelligence is not just an experiment, but a core, competitive capability woven into the fabric of your organization. The time to build this foundation is now.