Liquid Cooling Adoption Transforms Data Centers

For decades, the familiar hum of whirring fans has been the universal soundtrack of the data center. Massive air conditioning systems and computer room air handlers (CRAHs) have been the default solution for managing the heat generated by servers. However, this long-standing paradigm is undergoing a radical transformation. As computational demands skyrocket, driven by artificial intelligence, machine learning, and high-performance computing (HPC), traditional air cooling is hitting its physical and economic limits. In its place, a more powerful and efficient technology is surging to the forefront: liquid cooling. This comprehensive analysis explores the rapid growth of liquid cooling adoption, examining the powerful drivers behind this shift, the different technological approaches available, the tangible benefits for businesses and the environment, and the future trajectory of this transformative technology that is redefining the very architecture of modern computing.

A. The Thermal Tipping Point: Why Air Cooling is No Longer Enough

The move to liquid cooling is not a mere incremental upgrade; it is a necessary response to fundamental shifts in computing hardware and density.

A. The Exponential Heat Generation of Modern Processors:

The end of Moore’s Law in its traditional form has led chip manufacturers to increase performance not just through smaller transistors, but through specialized architectures and higher power densities. Modern CPUs and, more critically, GPUs used for AI training now commonly carry thermal design power (TDP) ratings of 400W to 700W or more per chip. Air, with its low heat capacity per unit volume and low thermal conductivity, struggles to remove this concentrated heat efficiently. The result can be thermal throttling, where processors slow down to prevent damage.
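To make the throttling mechanism concrete, here is a minimal Python sketch using a single lumped thermal resistance: junction temperature equals coolant inlet temperature plus power times resistance. The resistance values, inlet temperatures, and 90 °C throttle threshold are hypothetical numbers chosen for illustration, not measurements of any real heatsink or cold plate.

```python
# One-resistance steady-state thermal model:
#   T_junction = T_inlet + P * R_th
# R_th (in °C/W) lumps the whole path from die to cooling medium.
# All numbers below are hypothetical and for illustration only.


def junction_temp_c(power_w: float, inlet_temp_c: float, r_th_c_per_w: float) -> float:
    """Steady-state junction temperature in °C."""
    return inlet_temp_c + power_w * r_th_c_per_w


def max_power_before_throttle_w(t_max_c: float, inlet_temp_c: float, r_th_c_per_w: float) -> float:
    """Largest sustained power that keeps the junction at or below t_max_c."""
    return (t_max_c - inlet_temp_c) / r_th_c_per_w


T_MAX_C = 90.0   # hypothetical throttle threshold
CHIP_W = 700.0   # upper end of the TDP range quoted above

# (label, inlet temperature in °C, thermal resistance in °C/W): hypothetical values
paths = [("air heatsink", 30.0, 0.10), ("cold plate", 35.0, 0.05)]

for label, inlet, r_th in paths:
    t_j = junction_temp_c(CHIP_W, inlet, r_th)
    limit = max_power_before_throttle_w(T_MAX_C, inlet, r_th)
    status = "throttles" if t_j > T_MAX_C else "no throttling"
    print(f"{label}: T_j = {t_j:.0f} °C at {CHIP_W:.0f} W ({status}); limit {limit:.0f} W")
```

With these assumed values the air path cannot sustain 700 W without exceeding the threshold, while the lower-resistance liquid path has headroom. Real thermal stacks have several resistances in series (die, thermal interface, spreader, heatsink or plate), which this one-term model deliberately lumps together.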

B. The Unstoppable Rise of AI and GPU-Dense Workloads:

Artificial intelligence is the single biggest catalyst for liquid cooling. A single AI training server rack can now draw 40kW, 60kW, or even more than 100kW, densities far beyond what air cooling can handle effectively. The sheer concentration of high-wattage GPUs in these systems creates thermal densities that render even the most powerful fans and air conditioners inadequate.

C. The Physical Limitations of Air as a Cooling Medium:

The laws of physics favor liquid. Per unit volume, water can absorb roughly 3,500 times as much heat as air for the same temperature rise. Moving heat with air therefore requires immense energy to push large volumes across components, leading to high electricity costs for fans and computer room air conditioning (CRAC) units. As rack densities climb past 20kW, the cost and inefficiency of moving enough air become prohibitive.
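The air-versus-water comparison follows from the sensible-heat balance P = ρ · c_p · V̇ · ΔT. The Python sketch below uses approximate room-temperature property values, an assumed 12 K air temperature rise across the rack, and the fan affinity law (for a given fan and system, fan power scales roughly with the cube of airflow) to show why moving enough air becomes costly as rack power grows.

```python
# Sensible-heat balance: P = rho * c_p * V_dot * dT  =>  V_dot = P / (rho * c_p * dT)
# Approximate property values near room temperature.
AIR_RHO = 1.2         # kg/m^3
AIR_CP = 1005.0       # J/(kg*K)
WATER_RHO = 998.0     # kg/m^3
WATER_CP = 4180.0     # J/(kg*K)
M3S_TO_CFM = 2118.88  # 1 m^3/s expressed in cubic feet per minute


def volumetric_heat_capacity(rho: float, cp: float) -> float:
    """Heat absorbed per cubic metre per kelvin of temperature rise, in J/(m^3*K)."""
    return rho * cp


def flow_m3s(power_w: float, rho: float, cp: float, delta_t_k: float) -> float:
    """Volumetric flow needed to carry power_w away with a delta_t_k temperature rise."""
    return power_w / (rho * cp * delta_t_k)


def fan_power_ratio(flow_ratio: float) -> float:
    """Fan affinity law: for a given fan and system, power scales with flow cubed."""
    return flow_ratio ** 3


ratio = volumetric_heat_capacity(WATER_RHO, WATER_CP) / volumetric_heat_capacity(AIR_RHO, AIR_CP)
print(f"water vs. air, heat per unit volume: ~{ratio:,.0f}x")

# Assumed 12 K air temperature rise from rack inlet to exhaust.
for rack_kw in (10, 20, 40):
    air = flow_m3s(rack_kw * 1000.0, AIR_RHO, AIR_CP, 12.0)
    print(f"{rack_kw} kW rack: {air:.2f} m^3/s (~{air * M3S_TO_CFM:,.0f} CFM) of air")

print(f"doubling airflow through the same fans: ~{fan_power_ratio(2.0):.0f}x fan power")
```

Required airflow grows linearly with rack power, but pushing more air through the same fans costs power roughly cubically, which is why high-density racks hit an economic wall before a physical one.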

D. The Sustainability Imperative and PUE Goals:

Power Usage Effectiveness (PUE), the ratio of total facility power to the power delivered to IT equipment, has become a key metric for data center efficiency. Traditional air-cooled data centers often struggle to achieve PUEs below 1.5, meaning that for every watt powering IT equipment, another half watt goes to cooling and power-distribution overhead. Liquid cooling systems can drive PUE down toward 1.02 to 1.1, close to the ideal of 1.0, dramatically reducing a facility’s total energy consumption and carbon footprint.
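PUE is simple arithmetic, so the overhead implied by each figure is easy to sketch. The snippet below assumes a hypothetical 1 MW IT load and compares the PUE values mentioned in the text.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_kw


def overhead_kw(it_kw: float, pue_value: float) -> float:
    """Non-IT power (cooling, power-distribution losses, etc.) implied by a PUE."""
    return it_kw * (pue_value - 1.0)


IT_LOAD_KW = 1000.0  # hypothetical 1 MW IT load

for p in (1.5, 1.1, 1.02):
    print(f"PUE {p}: {overhead_kw(IT_LOAD_KW, p):.0f} kW overhead, "
          f"{IT_LOAD_KW * p:.0f} kW total facility draw")
```

At the same IT load, moving from PUE 1.5 to 1.1 shrinks overhead from about 500 kW to about 100 kW, which is where the energy and carbon savings come from.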

B. The Liquid Cooling Landscape: A Detailed Look at the Technologies

“Liquid cooling” is an umbrella term for several distinct technologies, each with its own implementation, advantages, and ideal use cases.

A. Direct-to-Chip (or Cold Plate) Cooling:

This is the most common form of liquid cooling currently being adopted in hyperscale and enterprise data centers.

- How it Works: Small metal cold plates are mounted directly on high-heat components such as CPUs and GPUs. A coolant, typically treated or deionized water (often mixed with glycol) or a specialized dielectric fluid, circulates through micro-channels in each plate and absorbs heat at the source. The heated liquid then flows to a heat exchanger, commonly housed in a coolant distribution unit (CDU), where facility water cools it before it is recirculated.
- Pros:
  - Highly efficient at removing heat from specific hot spots.
  - Allows for higher compute density than air cooling.
  - Can be retrofitted into some existing server designs.
- Cons:
  - Requires modification to servers and involves plumbing inside the rack.
  - Cools only the components fitted with cold plates; other parts such as RAM and power supplies may still require airflow.
- Ideal For: High-performance computing clusters, AI training servers, and overclocked financial trading systems.
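A rough sizing of the cold-plate loop follows from the heat balance: mass flow equals power divided by specific heat times temperature rise. This Python sketch assumes plain-water properties, a 10 K coolant temperature rise, a hypothetical 700 W GPU, and that all chip power reaches the coolant; a glycol mix (lower specific heat) or a smaller temperature rise would require more flow.

```python
# Coolant flow for a cold-plate loop from the sensible-heat balance:
#   m_dot = P / (c_p * dT)
# Assumptions (hypothetical): plain-water properties, all chip power reaches the coolant.
WATER_CP = 4180.0   # J/(kg*K)
WATER_RHO = 998.0   # kg/m^3


def coolant_flow_lpm(power_w: float, delta_t_k: float,
                     cp: float = WATER_CP, rho: float = WATER_RHO) -> float:
    """Litres per minute of coolant needed to absorb power_w with a delta_t_k rise."""
    mass_flow_kg_s = power_w / (cp * delta_t_k)
    volume_flow_m3_s = mass_flow_kg_s / rho
    return volume_flow_m3_s * 1000.0 * 60.0  # m^3/s -> L/s -> L/min


per_gpu = coolant_flow_lpm(700.0, 10.0)
print(f"one 700 W GPU, 10 K rise: {per_gpu:.2f} L/min")

# A hypothetical server with eight such GPUs sharing one loop:
print(f"eight GPUs: {coolant_flow_lpm(8 * 700.0, 10.0):.1f} L/min")
```

Roughly one litre per minute per 700 W chip is a modest flow, which illustrates why a thin liquid loop can replace the large air volumes a fan-cooled heatsink would need.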

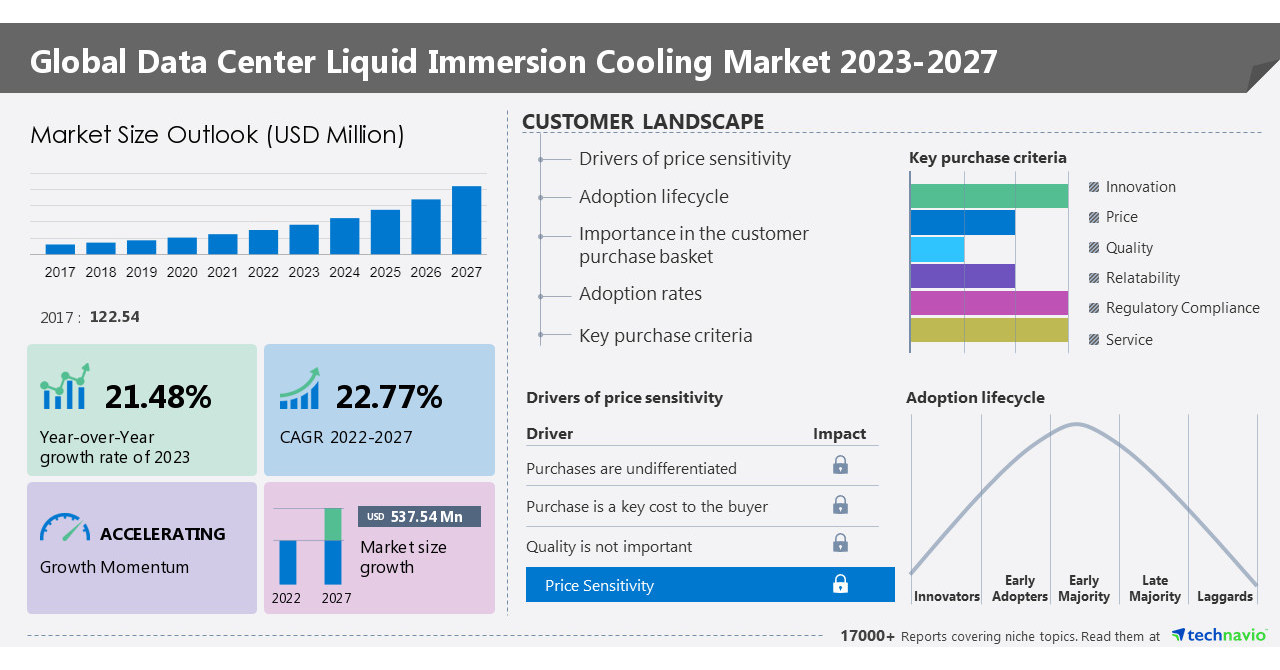

B. Immersion Cooling:

This is the most radical and efficient approach, representing a complete departure from air-based paradigms.

- How it Works: Entire servers, often a full rack’s worth in a single tank, are submerged in a bath of electrically non-conductive dielectric fluid. Because the fluid conducts heat but not electricity, it can safely come into direct contact with every electronic component. In single-phase systems the fluid stays liquid and is circulated, by pumps or natural convection, through an external heat exchanger. In two-phase systems it boils at hot component surfaces, and the vapor condenses on a cooled coil above the bath and drips back in, carrying the heat out to an external heat exchanger.
- Pros:
  - Maximum Efficiency: Eliminates the need for server fans entirely, reducing server power consumption by up to 15%.
  - Extreme Density: Enables rack power densities of 100kW and beyond.
  - Silent Operation: Creates a near-silent computing environment.
  - Hardware Longevity: Protects components from dust, corrosion, and moisture.
- Cons:
  - Higher initial infrastructure cost for the tanks and fluid.
  - Server maintenance requires “fishing” components out of the fluid.
  - Requires a significant shift in data center operational procedures.
- Ideal For: Cryptocurrency mining, large-scale AI model training, and future-forward data center designs aiming for ultimate efficiency.
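In two-phase immersion, most heat leaves the components as latent heat of vaporization, so at steady state the condenser must reject the same power the servers dissipate. The sketch below uses an assumed latent heat of 100 kJ/kg purely as an order-of-magnitude placeholder for an engineered dielectric fluid; a real design would take this value from the fluid’s datasheet.

```python
# Two-phase energy balance: heat leaves as latent heat of vaporization.
#   m_dot_vapor = P / h_fg
# At steady state the condenser re-condenses vapor at the same rate it is produced.
H_FG_J_PER_KG = 100_000.0  # hypothetical latent heat, J/kg (check the fluid datasheet)


def vapor_generation_kg_s(power_w: float, h_fg: float = H_FG_J_PER_KG) -> float:
    """Mass of fluid boiled off per second when power_w is absorbed as latent heat."""
    return power_w / h_fg


def condenser_duty_w(vapor_kg_s: float, h_fg: float = H_FG_J_PER_KG) -> float:
    """Heat the condenser coil must reject to return that vapor to liquid."""
    return vapor_kg_s * h_fg


tank_power_w = 100_000.0  # hypothetical 100 kW tank
vapor = vapor_generation_kg_s(tank_power_w)
print(f"{vapor:.1f} kg/s of vapor; condenser duty {condenser_duty_w(vapor) / 1000:.0f} kW")
```

Because boiling happens at a fixed temperature for a given pressure, components in a two-phase bath sit near the fluid’s boiling point, which is what makes the approach attractive for very dense racks.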

C. Rear-Door Heat Exchangers (RDHx):

A less invasive form of liquid cooling that acts as a hybrid solution.

- How it Works: A door containing a liquid-cooled coil, typically fed with chilled water, is installed on the back of a server rack. Hot exhaust air from the servers passes over the coil, and its heat is transferred to the water before the air re-enters the data hall.
- Pros:
  - Easy to deploy; it is a simple rack-level upgrade.
  - No modifications to the servers are required.
  - Highly effective at capturing heat at the rack, before it reaches the room.
- Cons:
  - Less effective than direct-to-chip or immersion for the highest density racks.
  - Still relies on fans, either the servers’ own or, in active units, fans in the door, to move air through the heat exchanger.
- Ideal For: Upgrading existing data halls with rising densities, and racks in the 20-40kW range.
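How much of a rack’s heat a rear door removes can be estimated from air temperatures alone, because the same air mass flows through the servers and then through the coil. This Python sketch assumes constant air specific heat and uses hypothetical inlet, exhaust, and door-outlet temperatures for a hypothetical 30 kW rack.

```python
# Fraction of a rack's heat removed by a rear-door coil. With the same air mass
# flow and (approximately) constant c_p, heat is proportional to temperature change:
#   fraction = (T_exhaust - T_door_out) / (T_exhaust - T_inlet)
# A fraction of 1.0 means the door returns air at the room inlet temperature.


def rdhx_capture_fraction(t_inlet_c: float, t_exhaust_c: float, t_door_out_c: float) -> float:
    rise = t_exhaust_c - t_inlet_c
    if rise <= 0:
        raise ValueError("exhaust must be warmer than inlet")
    return (t_exhaust_c - t_door_out_c) / rise


rack_kw = 30.0  # hypothetical rack load
frac = rdhx_capture_fraction(t_inlet_c=24.0, t_exhaust_c=40.0, t_door_out_c=26.0)
print(f"door captures {frac:.0%} (~{rack_kw * frac:.1f} kW); "
      f"{rack_kw * (1 - frac):.1f} kW still reaches the room")
```

Any heat the door does not capture still has to be handled by room-level air cooling, which is one reason rear doors suit moderate densities better than the highest-power racks.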

C. The Compelling Business Case: Tangible Benefits of Adoption

The shift to liquid cooling is driven by a powerful combination of performance, economic, and environmental advantages.

A. Unlocking Maximum Computational Performance:

By preventing thermal throttling, liquid cooling lets CPUs and GPUs sustain their maximum clock speeds. For AI training jobs that run for weeks, this can shorten the total time to solution by days, providing a significant competitive advantage. It also makes overclocking more reliable, extracting more performance from existing hardware.

B. Dramatic Reductions in Total Cost of Ownership (TCO):

While the initial capital expenditure can be higher, the operational savings are substantial.

- Energy Savings: Eliminating or reducing CRAC units and thousands of server fans can cut a data center’s cooling energy substantially, by as much as 90% in some designs.
- Increased Hardware Density: More compute power can be housed in the same physical footprint, reducing real estate costs.
- Hardware Reliability: Cooler, more stable operating temperatures can extend the lifespan of IT equipment, reducing replacement costs and e-waste.

C. Achieving Sustainability and Environmental Goals:

Liquid cooling is a cornerstone of the green data center.

- Lower PUE: As mentioned, it is the most effective path to PUE scores close to the ideal of 1.0.
- Water Conservation: Liquid cooling systems can be designed as closed-loop circuits that consume little or no water, unlike evaporative cooling towers, which is critical in water-stressed regions.
- Waste Heat Reutilization: Heat captured by a liquid cooling loop leaves at a higher temperature, and is therefore of higher grade, than exhaust heat from air systems, making it more economically viable to recycle for heating buildings, swimming pools, or industrial processes.

D. Enabling New Architectural Possibilities:

Liquid cooling breaks data centers free from the constraints of traditional raised floors and massive air handling units. This allows for more flexible facility designs and even the deployment of compute in non-traditional spaces, such as edge locations where space is limited and air conditioning is unavailable.

D. Navigating the Adoption Journey: Challenges and Considerations

Despite the clear benefits, the transition to liquid cooling is not without its hurdles.

A. The Skills and Knowledge Gap:

Data center technicians and operators are highly skilled in air-cooled environments. Adopting liquid cooling requires new training in fluid dynamics, plumbing, leak detection, and handling dielectric fluids. This represents a significant cultural and operational shift.

B. Perceived Risk and Leak Mitigation:

The fear of water and electronics mixing is deeply ingrained. While modern systems use dripless quick-disconnect fittings and, in some designs, dielectric fluids that are harmless to electronics, risk perception remains a barrier. Manufacturers are addressing this with robust containment systems and advanced leak detection sensors.
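One common idea behind loop-level leak detection is to compare supply and return flow: in a sealed loop they should match, so a sustained shortfall on the return side suggests coolant is escaping. The Python class below is a hypothetical sketch of that check, not any vendor’s algorithm. The 0.5 L/min threshold and the requirement that several consecutive readings exceed it (to ride out sensor noise) are illustrative assumptions, and physical leak sensors like those mentioned above would complement rather than replace it.

```python
from collections import deque


class FlowImbalanceDetector:
    """Hypothetical leak check: alarm when return flow stays below supply flow."""

    def __init__(self, threshold_lpm: float = 0.5, window: int = 5):
        self.threshold_lpm = threshold_lpm
        self.recent = deque(maxlen=window)  # most recent supply-minus-return gaps

    def update(self, supply_lpm: float, return_lpm: float) -> bool:
        """Record one reading; return True only when every gap in a full window exceeds the threshold."""
        self.recent.append(supply_lpm - return_lpm)
        return (len(self.recent) == self.recent.maxlen
                and all(gap > self.threshold_lpm for gap in self.recent))


# Usage: a single noisy reading does not trigger; a sustained gap does.
detector = FlowImbalanceDetector(threshold_lpm=0.5, window=3)
for supply, ret in [(60.0, 59.9), (60.0, 59.2), (60.0, 59.1), (60.0, 59.0)]:
    print(detector.update(supply, ret))
```

Requiring a full window of consecutive violations trades a few seconds of detection latency for far fewer false alarms.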

C. Initial Capital Investment and ROI Calculation:

The upfront cost of liquid-cooled servers, racks, and facility-side heat rejection systems is higher than for traditional air cooling. A detailed, long-term TCO analysis that factors in energy savings, density improvements, and hardware longevity is essential to justify the investment.
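A first-pass ROI check often starts with a simple payback period: extra capital cost divided by annual savings. The Python sketch below counts only energy savings from a lower PUE at a fixed IT load. The 1 MW load, PUE values, electricity price, and capital premium are all hypothetical inputs, and a full TCO model would add the density and hardware-longevity terms named above, plus water and maintenance costs.

```python
HOURS_PER_YEAR = 8760


def annual_energy_cost(it_kw: float, pue: float, price_per_kwh: float) -> float:
    """Yearly facility electricity cost for a constant IT load at a given PUE."""
    return it_kw * pue * HOURS_PER_YEAR * price_per_kwh


def simple_payback_years(extra_capex: float, it_kw: float, pue_before: float,
                         pue_after: float, price_per_kwh: float) -> float:
    """Years for energy savings alone to repay the extra capital cost."""
    savings = (annual_energy_cost(it_kw, pue_before, price_per_kwh)
               - annual_energy_cost(it_kw, pue_after, price_per_kwh))
    if savings <= 0:
        return float("inf")  # no energy savings, so no payback from this term
    return extra_capex / savings


# Hypothetical inputs: 1 MW IT, PUE 1.5 -> 1.15, $0.10/kWh, $1.5M extra capex.
years = simple_payback_years(1_500_000, 1000.0, 1.5, 1.15, 0.10)
print(f"simple payback: {years:.1f} years")
```

Simple payback ignores the time value of money; a longer-horizon analysis would discount future savings, but the structure of the comparison stays the same.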

D. Ecosystem Immaturity and Vendor Lock-In:

While growing rapidly, the liquid cooling ecosystem is still more fragmented than the mature air-cooling market. Organizations must carefully evaluate vendor roadmaps, industry standards (like the Open Compute Project’s guidelines), and the long-term viability of specific cooling technologies to avoid proprietary lock-in.

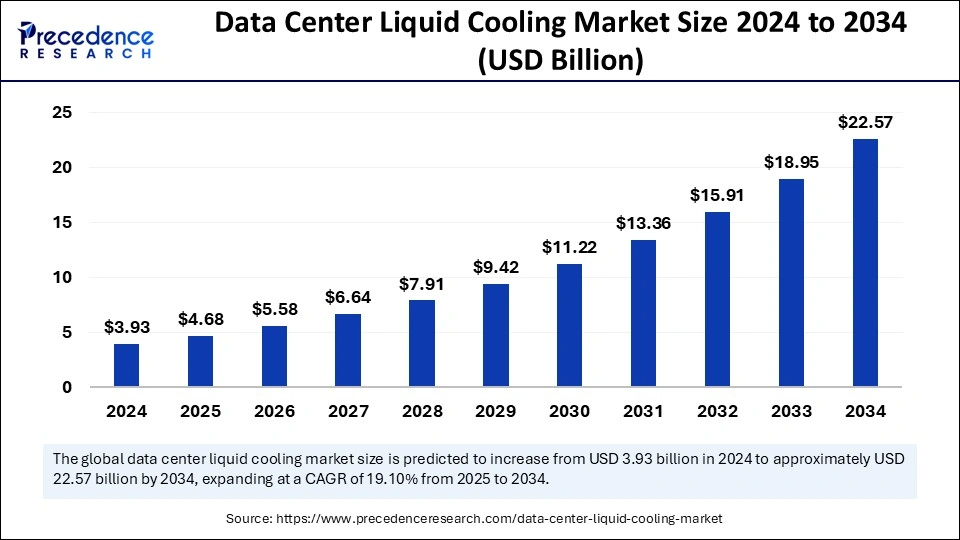

E. The Future Trajectory: Where Liquid Cooling is Headed

The adoption of liquid cooling is accelerating and will fundamentally reshape data center design in the coming years.

A. The Mainstreaming of Immersion Cooling for AI:

As AI becomes embedded in every industry, immersion cooling is likely to become a standard option for training and inferencing clusters. Some major cloud providers have already deployed it in production, and it is expected to trickle down to enterprise and colocation facilities.

B. The Rise of Coolant-as-a-Service (CaaS):

To overcome upfront cost barriers, we will see the emergence of service models where customers pay a monthly fee for the cooling infrastructure and fluid management, similar to a Hardware-as-a-Service model.

C. Direct Liquid Cooling for the Chiplet Era:

The next evolution will see cooling integrated directly into the processor package itself. As chiplet-based designs become standard, micro-channels for liquid could be built directly into the interposer or substrate, allowing for cooling at an unprecedented microscopic level.

D. Standardization and Modular Deployment:

The industry will coalesce around standard form factors and connectors for liquid-cooled racks. This will enable pre-fabricated, modular data center pods that are fully liquid-cooled, allowing for rapid deployment and scaling of compute capacity.

Conclusion: The Inevitable Transition to a Liquid-Cooled Future

The growth of liquid cooling adoption marks a fundamental inflection point in the history of computing infrastructure. It is a clear acknowledgment that the future of computation—a future defined by artificial intelligence, exascale computing, and global digital services—cannot be built on a foundation of inefficient 20th-century cooling technology. The transition from air to liquid is as significant as the move from vacuum tubes to transistors. While challenges around cost, skills, and perception remain, the overwhelming benefits in performance, efficiency, and sustainability make this shift not just desirable, but inevitable. For data center operators, IT managers, and business leaders, the question is no longer if they will adopt liquid cooling, but when and how. Those who proactively embrace this transformation will be best positioned to harness the full power of next-generation technologies, achieve their environmental goals, and maintain a decisive competitive edge in the data-driven economy of tomorrow.