Serverless Computing Revolutionizes Application Development

The landscape of software development and deployment is undergoing its most significant transformation since the advent of the cloud itself. We are moving beyond the era of provisioning virtual machines and managing container orchestrators into a new paradigm where the very concept of a “server” fades into the background. This paradigm is serverless computing, and it is fundamentally changing how developers build, deploy, and scale applications. By abstracting away the underlying infrastructure entirely, serverless allows teams to focus purely on code and business logic, triggering a seismic shift in operational models, cost structures, and development velocity. This in-depth analysis explores the serverless revolution, detailing its core principles, profound benefits, practical use cases, and the challenges that organizations must navigate to fully harness its transformative potential.

A. Deconstructing Serverless: Beyond the Misleading Name

The term “serverless” is arguably one of the most misunderstood in modern technology. It does not mean that servers are absent; rather, it signifies that their management is entirely invisible to the developer.

A. The Core Concept: Function-as-a-Service (FaaS):

At the heart of serverless computing is the FaaS model. Developers write discrete, single-purpose functions—small blocks of code designed to perform a specific task.

-

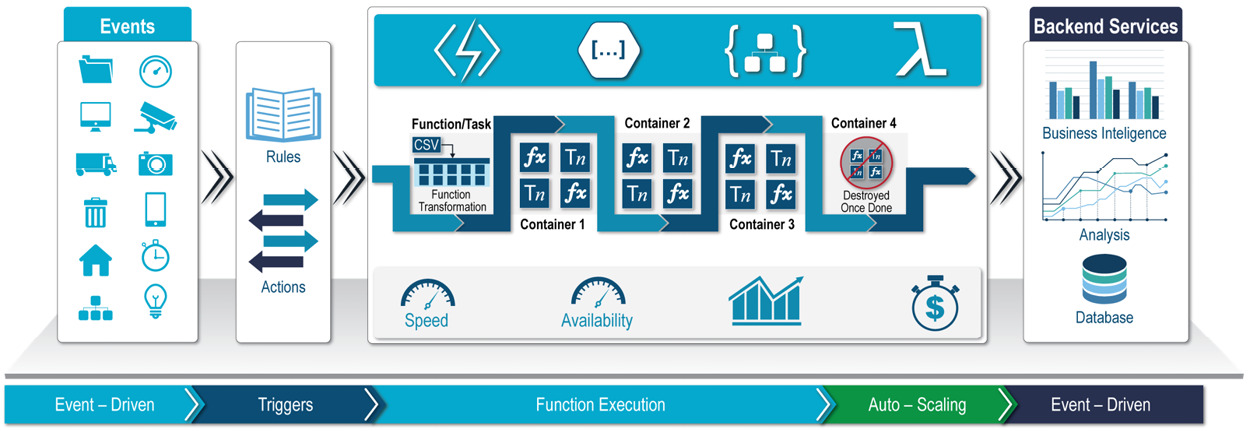

Event-Driven Execution: These functions remain dormant until a specific trigger or event occurs. This event could be an HTTP request, a new file uploaded to cloud storage, a new database entry, a scheduled timer, or a message arriving in a queue.

-

Stateless by Design: Each function execution is typically stateless, meaning it does not retain any memory of previous executions. Any required state must be stored in an external service like a database or cache.

-

Ephemeral Containers: When an event triggers a function, the cloud provider dynamically allocates a container to run it. After execution (typically within seconds or minutes), the container is destroyed. This is the foundation of the unparalleled scalability and cost model.
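A minimal sketch in Python of these properties, assuming a hypothetical event shape `{"user_id": ..., "action": ...}` and a module-level `EXTERNAL_STORE` dictionary standing in for a managed database or cache. The `(event, context)` handler signature follows the convention of AWS Lambda's Python runtime; other providers use different signatures.

```python
# Hypothetical stand-in for an external managed database or cache.
# In a real deployment this would be a network service, not a module-level dict.
EXTERNAL_STORE: dict[str, int] = {}


def handler(event: dict, context: object = None) -> dict:
    """Runs once per event and keeps no state of its own between invocations.

    The event shape {"user_id": ..., "action": ...} is hypothetical; real
    triggers (HTTP, storage, queue) each define their own payload format.
    """
    user_id = event["user_id"]
    # Read and write state externally instead of relying on local variables,
    # which may or may not survive until the next invocation.
    count = EXTERNAL_STORE.get(user_id, 0) + 1
    EXTERNAL_STORE[user_id] = count
    return {"user_id": user_id, "action": event["action"], "count": count}
```

Because the counter lives in the external store, any execution environment handling the next event for the same user sees the same value.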

B. The Supporting Ecosystem: Backend-as-a-Service (BaaS):

Serverless architecture often extends beyond FaaS to include a full suite of managed services that replace traditional backend components.

- Managed Databases: Services like Amazon DynamoDB, Google Firestore, or Azure Cosmos DB that handle scaling, patching, and backups automatically.

- Authentication Services: Platforms like Amazon Cognito or Auth0 that manage user sign-up, login, and access control without any server management.

- API Gateways: Managed services that act as a front door for functions, handling request routing, throttling, and authorization.

C. The Fundamental Shift in Responsibility:

Serverless redefines the shared responsibility model between the cloud provider and the customer.

- Cloud Provider Manages: The physical hardware, virtual machines, hypervisors, operating system, language runtime, and resource scaling.

- Developer Focuses On: The application code, business logic, and data, plus function-level configuration such as memory, timeouts, and access permissions. The operational burden of managing “servers” is largely eliminated.

B. The Compelling Advantages: Why Serverless is a Game-Changer

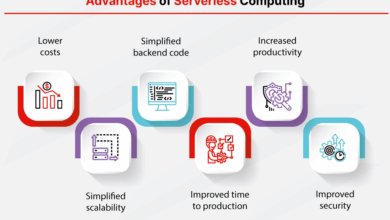

The adoption of serverless computing is driven by a powerful combination of economic, operational, and technical benefits that deliver tangible value.

A. The Revolutionary Granular Cost Model:

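Serverless operates on a true pay-per-use pricing model. You are billed for the number of times your code is executed and for the compute time it consumes, usually weighted by the memory allocated to the function and measured in fine-grained increments (AWS Lambda, for example, rounds duration up to the nearest millisecond).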

- Zero Cost When Idle: Unlike a provisioned virtual machine or container that incurs costs 24/7, a serverless function incurs no compute charges when it is not running (unless you pay to keep capacity warm, for example with provisioned concurrency). This makes it exceptionally cost-effective for applications with sporadic, unpredictable traffic patterns.

- No Over-Provisioning: Organizations no longer need to pay for peak capacity that sits idle most of the time. This eliminates one of the biggest sources of cloud waste.

- Reduced Operational Overhead: The savings extend beyond compute costs to include reduced spending on system administration, monitoring software, and DevOps tools dedicated to infrastructure management.
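The billing arithmetic can be sketched as follows. The unit prices are illustrative assumptions rather than a quote from any provider, and the calculation ignores free tiers, data transfer, and the managed services a function calls.

```python
from dataclasses import dataclass


@dataclass
class FaasPricing:
    # Illustrative unit prices only; check your provider's current price list.
    price_per_million_requests: float = 0.20
    price_per_gb_second: float = 0.0000166667


def monthly_faas_cost(invocations: int, avg_duration_ms: float, memory_mb: int,
                      pricing: FaasPricing = FaasPricing()) -> float:
    """Pay-per-use cost: a per-request charge plus memory x duration (GB-seconds)."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * pricing.price_per_million_requests
    compute_cost = gb_seconds * pricing.price_per_gb_second
    return request_cost + compute_cost


def monthly_vm_cost(hourly_rate: float, hours: float = 730) -> float:
    """An always-on VM is billed for every hour, whether busy or idle."""
    return hourly_rate * hours
```

With these assumed prices, one million 200 ms invocations at 512 MB come to about $1.87 for the month, zero invocations cost nothing, and a hypothetical VM at $0.05 per hour costs $36.50 regardless of traffic.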

B. Built-In, Automatic, and Elastic Scalability:

This is one of the most powerful features of serverless architecture.

- From Zero to Thousands of Concurrent Executions: A function can scale from zero executions to thousands of concurrent executions without any developer intervention. The cloud provider’s infrastructure automatically provisions the necessary compute resources in real time, up to the account- and region-level concurrency quotas it enforces.

- Handling “Flash Crowds” and Viral Growth: For applications that might experience sudden, unexpected traffic spikes, serverless provides inherent resilience. The function tier itself is rarely the bottleneck, although scaling is bounded by concurrency quotas and burst limits, and downstream dependencies such as databases can still be overwhelmed.
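A rough way to reason about these limits is Little's law: the number of concurrent executions is approximately the request rate multiplied by the average duration. The sketch below applies it against a hypothetical concurrency quota; real quotas and burst behavior vary by provider and account.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_s: float) -> int:
    """Little's law: concurrent executions ~= arrival rate x time in system."""
    return math.ceil(requests_per_second * avg_duration_s)


def throttled_fraction(requests_per_second: float, avg_duration_s: float,
                       concurrency_limit: int) -> float:
    """Rough steady-state share of requests that would exceed a concurrency quota.

    Ignores bursts, retries, and queuing; it is an estimate, not a simulation.
    """
    needed = requests_per_second * avg_duration_s
    if needed <= concurrency_limit:
        return 0.0
    return 1 - concurrency_limit / needed
```

For example, 4,000 requests per second at 250 ms each need about 1,000 concurrent executions; doubling the traffic against a 1,000-execution quota would, in this approximation, throttle half of the requests.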

C. Unprecedented Development Velocity and Agility:

By removing infrastructure concerns, serverless dramatically accelerates the software development lifecycle.

- Focus on Business Logic: Developers can spend far more of their time writing features that provide customer value, rather than wrestling with configuration management, load balancers, or OS security patches.

- Simplified Deployment: Deploying a new version of a function is often as simple as uploading code. CI/CD pipelines become significantly simpler and more focused.

- Microservices Made Easy: Serverless is a natural architectural fit for microservices, allowing each service to be developed, deployed, and scaled independently with minimal operational overhead.

D. Enhanced Reliability and Fault Tolerance:

Major cloud providers typically run serverless functions across multiple availability zones within a region (AWS Lambda, for example, does so by default).

- Built-In High Availability: Functions are inherently distributed, reducing the impact of a single hardware or software failure within a data center.

- Automated Management: The cloud provider handles routine maintenance, security patching of the underlying infrastructure and runtime, and infrastructure failures transparently, leading to a more robust and secure application platform. Application code and its dependencies remain the customer’s responsibility.

C. Practical Applications: Where Serverless Excels

Serverless computing is not a one-size-fits-all solution, but it is exceptionally well-suited for a wide range of modern application patterns.

A. Event-Driven Data Processing Pipelines:

This is a classic and powerful use case for serverless functions.

- Real-Time File Processing: Trigger a function automatically when a new image is uploaded to cloud storage to generate thumbnails, extract metadata, or perform virus scanning.

- Stream Processing: Process data in real time from streams and messaging services (like Amazon Kinesis or Google Cloud Pub/Sub) for activities such as data enrichment, filtering, and aggregation before loading it into a data warehouse.

- ETL (Extract, Transform, Load) Jobs: Replace scheduled, monolithic ETL servers with functions that run only when new data arrives, making the pipeline more efficient and event-driven.
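The file-processing case might look like the following sketch, which parses an S3-style event notification (the `Records[].s3.bucket.name` and `Records[].s3.object.key` fields) and plans one thumbnail job per uploaded image. The `-thumbnails` output bucket naming is a hypothetical convention, and the actual resizing and upload are omitted.

```python
from urllib.parse import unquote_plus


def handler(event: dict, context: object = None) -> list[dict]:
    """Plan a thumbnail job for every image in an S3-style upload event.

    Only event parsing is shown; resizing and writing the result would
    normally use an image library and the provider's SDK.
    """
    jobs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications (spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        if not key.lower().endswith((".jpg", ".jpeg", ".png")):
            continue  # ignore non-image uploads
        jobs.append({
            "source": f"{bucket}/{key}",
            # Writing to a separate (hypothetical) bucket avoids re-triggering
            # this function on its own output.
            "thumbnail": f"{bucket}-thumbnails/{key}",
        })
    return jobs
```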

B. Modern Web and API Backends:

Serverless is ideal for building the backend for web and mobile applications.

- RESTful APIs: Using an API Gateway to trigger functions for each endpoint (e.g., `/getUser`, `/createOrder`). This creates a highly scalable and cost-effective backend.

- Mobile Backends: Building a BaaS architecture where mobile app interactions directly trigger serverless functions for business logic, while using managed databases and authentication services.
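A sketch of the API backend pattern, assuming the Lambda proxy integration event format of an API Gateway REST API (`httpMethod`, `path`, `queryStringParameters`, `body`) and a hypothetical in-memory `USERS` table in place of a database. For brevity both endpoints share one handler; the one-function-per-endpoint layout described above would split the two branches into separate functions.

```python
import json

# Hypothetical in-memory data standing in for a managed database.
USERS = {"42": {"id": "42", "name": "Ada"}}


def _response(status: int, body: dict) -> dict:
    # Response shape expected by an API Gateway proxy integration.
    return {"statusCode": status,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps(body)}


def handler(event: dict, context: object = None) -> dict:
    route = (event.get("httpMethod"), event.get("path"))
    if route == ("GET", "/getUser"):
        # queryStringParameters is null when the request has no query string.
        params = event.get("queryStringParameters") or {}
        user = USERS.get(params.get("id", ""))
        if user is None:
            return _response(404, {"error": "user not found"})
        return _response(200, user)
    if route == ("POST", "/createOrder"):
        order = json.loads(event.get("body") or "{}")
        # A real backend would persist the order; this sketch just echoes it.
        return _response(201, {"status": "created", "order": order})
    return _response(404, {"error": "no such route"})
```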

C. Scheduled Tasks and Automation:

Timer-triggered functions can replace traditional cron jobs running on dedicated servers.

- Daily Report Generation: A function triggered by a timer that aggregates data and emails a report every morning.

- Database Cleanup: A nightly function that archives or deletes old records.

- Health Checks: A function that runs every minute to check the status of external APIs and sends an alert if an issue is detected.
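For the nightly cleanup job, the schedule itself is configured on the provider side (a timer or cron-style trigger), so the function body reduces to selection logic like the sketch below. The record shape, with a timezone-aware `created_at` field, and the 90-day retention default are assumptions.

```python
from datetime import datetime, timedelta, timezone


def partition_expired(records: list[dict], now: datetime,
                      retention_days: int = 90) -> tuple[list[dict], list[dict]]:
    """Split records into (keep, archive) by age.

    Each record is assumed to carry a timezone-aware "created_at" datetime.
    Passing `now` in explicitly keeps the logic deterministic and testable;
    a scheduled handler would call it with datetime.now(timezone.utc).
    """
    cutoff = now - timedelta(days=retention_days)
    keep = [r for r in records if r["created_at"] >= cutoff]
    archive = [r for r in records if r["created_at"] < cutoff]
    return keep, archive
```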

D. Internet of Things (IoT) Backends:

IoT environments generate massive volumes of small, intermittent messages from thousands of devices.

- Data Ingestion: Functions can be triggered by each device telemetry message, validating, processing, and routing it to the appropriate storage or analytics service.

- Command and Control: Sending commands back to devices by triggering functions through an API.
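Per-message ingestion can be sketched as a small validate-and-route function. The JSON message format, the `ROUTES` table, and the destination names are hypothetical; sending bad messages to a dead-letter destination instead of raising keeps one misbehaving device from stalling the stream.

```python
import json

# Hypothetical routing table: message type -> downstream destination name.
ROUTES = {"temperature": "timeseries-db", "alert": "alerts-queue"}
REQUIRED_FIELDS = ("device_id", "type", "value")


def route_telemetry(raw: str) -> tuple[str, dict]:
    """Validate one device message and return (destination, payload)."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError:
        return "dead-letter", {"raw": raw, "reason": "invalid JSON"}
    if not isinstance(msg, dict) or any(f not in msg for f in REQUIRED_FIELDS):
        return "dead-letter", {"raw": raw, "reason": "missing fields"}
    destination = ROUTES.get(msg["type"])
    if destination is None:
        return "dead-letter", {"raw": raw, "reason": "unknown type"}
    return destination, msg
```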

D. Navigating the Challenges: The Realities of Going Serverless

While the benefits are profound, a successful serverless strategy requires carefully addressing its inherent challenges.

A. The Cold Start Problem:

A “cold start” is the latency incurred when a function is invoked and no warm execution environment is available, for example after a period of inactivity or when traffic scales out. The provider must provision a new container, load the code, and bootstrap the runtime.

- Impact: This can add several hundred milliseconds to a few seconds of latency, which can be unacceptable for latency-sensitive, user-facing applications.

- Mitigation Strategies: Using provisioned concurrency (keeping a set number of pre-initialized instances ready), minimizing package size and initialization work, and choosing runtimes with faster startup (e.g., Go or Python rather than Java).
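Alongside those strategies, a widely recommended pattern is to do expensive setup (SDK clients, configuration, models) at module load time rather than inside the handler. The sketch below simulates this with a counter and a hypothetical `_expensive_init` helper: module scope runs once per execution environment, so warm invocations reuse the result. Reuse is not guaranteed, and this work still counts toward the cold start itself.

```python
import time

INIT_COUNT = 0


def _expensive_init() -> dict:
    """Hypothetical stand-in for creating SDK clients or loading models."""
    global INIT_COUNT
    INIT_COUNT += 1
    return {"client": "connected", "loaded_at": time.time()}


# Module scope runs once per execution environment (during the cold start);
# warm invocations that reuse the environment skip it.
RESOURCES = _expensive_init()


def handler(event: dict, context: object = None) -> dict:
    # Only per-request work happens inside the handler.
    return {"client": RESOURCES["client"], "init_count": INIT_COUNT}
```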

B. Monitoring and Debugging Complexity:

Traditional monitoring tools designed for static servers struggle in a serverless environment.

- Distributed Tracing: A single user request might traverse a dozen separate functions, making it difficult to track performance and errors. Tracing tools such as AWS X-Ray or OpenTelemetry-based solutions become essential.

- Log Aggregation: Logs are generated by ephemeral containers and must be centrally collected into a service like Amazon CloudWatch Logs or a third-party solution for analysis.
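One way to make logs from many ephemeral functions joinable is structured JSON logging with a correlation ID that each function reuses and passes on. This is a minimal sketch using only the standard library; the field names and the `validate-order` function name are hypothetical, and a tracing tool would normally propagate such IDs for you.

```python
import json
import logging
import sys
import uuid

logger = logging.getLogger("orders")
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate lines if the root logger is configured
_handler = logging.StreamHandler(sys.stdout)
_handler.setFormatter(logging.Formatter("%(message)s"))
logger.addHandler(_handler)


def log_event(correlation_id: str, function: str, message: str, **fields) -> str:
    """Emit one JSON log line that a log aggregator can index and join on."""
    line = json.dumps({"correlation_id": correlation_id, "function": function,
                       "message": message, **fields}, sort_keys=True)
    logger.info(line)
    return line


def handler(event: dict, context: object = None) -> dict:
    # Reuse the caller's ID if present so every hop of one request shares it.
    cid = event.get("correlation_id") or str(uuid.uuid4())
    log_event(cid, "validate-order", "received", items=len(event.get("items", [])))
    # Pass the ID along in the payload for the next function in the chain.
    return {"correlation_id": cid, "items": event.get("items", [])}
```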

C. Vendor Control and Potential Lock-In:

Your application’s execution model, APIs, and supporting services are tightly coupled to your cloud provider’s specific serverless implementation.

- Strategy: Adopting open-source frameworks like the Serverless Framework or using multi-cloud abstraction layers can help mitigate this risk, though it often comes at the cost of losing native optimizations.
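Independent of any framework, a simple form of abstraction layer is to keep business logic free of provider types and wrap it in thin, provider-specific adapters. In the sketch below, `place_order` is portable, while `aws_style_handler` (assuming an API Gateway-style proxy event) and `queue_message_adapter` (assuming a plain dict payload) are hypothetical adapters; moving providers would mean rewriting only the adapters.

```python
import json
from dataclasses import dataclass


@dataclass
class Order:
    sku: str
    quantity: int


def place_order(order: Order) -> dict:
    """Portable business logic: no provider SDK or event types appear here."""
    if order.quantity <= 0:
        raise ValueError("quantity must be positive")
    return {"sku": order.sku, "quantity": order.quantity, "status": "accepted"}


def aws_style_handler(event: dict, context: object = None) -> dict:
    """Hypothetical adapter for an API Gateway-style proxy event."""
    try:
        data = json.loads(event.get("body") or "{}")
        order = Order(sku=data["sku"], quantity=int(data["quantity"]))
        return {"statusCode": 200, "body": json.dumps(place_order(order))}
    except (KeyError, TypeError, ValueError) as exc:
        # Malformed input or a business-rule violation becomes a 400 response.
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}


def queue_message_adapter(message: dict) -> dict:
    """Hypothetical adapter for a queue-triggered invocation with a dict payload."""
    return place_order(Order(sku=message["sku"], quantity=int(message["quantity"])))
```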

D. Architectural Complexity and State Management:

Designing a complex application as a collection of hundreds of stateless functions requires a different architectural mindset.

- Orchestration: Coordinating multiple functions into a cohesive workflow typically calls for stateful orchestration tools such as AWS Step Functions or Azure Durable Functions.

- State Management: The stateless nature of functions mandates the use of external databases or caches for any persistent data, which can introduce latency and complexity.
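To show what an orchestrator adds, here is a toy, in-process sketch: it runs stateless steps in order, threads a state dictionary between them, and retries failed steps. It is not how AWS Step Functions is implemented or invoked; a managed service also persists the state durably, supports branching and timeouts, and survives the failure of any individual function.

```python
from typing import Callable

# A step is a stateless function: it takes the current state and returns new state.
Step = Callable[[dict], dict]


def run_workflow(steps: list[tuple[str, Step]], state: dict,
                 max_attempts: int = 3) -> dict:
    """Run named steps in order, passing each step's output to the next.

    State lives only in memory here, whereas a managed orchestrator would
    persist it between steps.
    """
    for name, step in steps:
        for attempt in range(1, max_attempts + 1):
            try:
                state = step(state)
                break  # step succeeded; move on to the next one
            except Exception as exc:
                if attempt == max_attempts:
                    raise RuntimeError(
                        f"step {name!r} failed after {max_attempts} attempts"
                    ) from exc
    return state
```

Because each step only transforms the state it is handed, steps can be retried or moved to other functions without hidden shared memory.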

E. The Future Trajectory: Where Serverless is Headed

The serverless evolution is far from over. Several key trends are shaping its future.

A. The Rise of Serverless Containers:

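Services like AWS Fargate and Google Cloud Run are bridging the gap between containers and serverless. They allow developers to package applications in containers without managing servers, paying only for the compute resources their containers use while running (Cloud Run can also scale to zero when idle). This provides more flexibility than the FaaS model, for example in the choice of language, libraries, and runtime environment.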

B. The Expansion to the Edge:

Serverless functions are being deployed to edge locations (for example, Cloudflare Workers and AWS Lambda@Edge), enabling code to run closer to end users. This reduces network round-trip latency for global applications and is well suited to tasks like A/B testing, bot mitigation, and personalization.
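Edge functions often need decisions that every location can make on its own. A sketch of deterministic A/B assignment: hashing the experiment name and user ID means all edge locations place a given user in the same variant without consulting a central database. It is written in Python for consistency with the other examples; edge platforms commonly run JavaScript or WebAssembly, but the logic ports directly. The function and experiment names are hypothetical.

```python
import hashlib


def ab_variant(user_id: str, experiment: str,
               variants: tuple[str, ...] = ("A", "B")) -> str:
    """Deterministically assign a user to a variant with no shared state.

    The same (experiment, user_id) pair always hashes to the same bucket,
    so independent edge locations agree without coordination.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    # 64 bits of the hash make modulo bias negligible for small variant counts.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]
```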

C. Specialized Serverless Databases and Services:

The ecosystem is expanding with purpose-built, serverless databases (like Amazon Aurora Serverless) and analytics engines that automatically scale their compute and capacity based on demand, further reducing operational overhead.

D. Improved Developer Experience and Tooling:

The tooling ecosystem is maturing rapidly, with better local testing environments, more powerful debugging capabilities, and frameworks that simplify the deployment and management of large serverless applications.

Conclusion: Embracing the Event-Driven Future

Serverless computing represents more than just a new way to run code; it embodies a fundamental shift in how we conceptualize and build software systems. It prioritizes agility, efficiency, and developer productivity above all else. While it introduces new challenges around monitoring, architecture, and vendor relationships, the benefits of reduced operational overhead, automatic and elastic scalability, and a granular cost model are simply too compelling to ignore.

For startups, it levels the playing field, allowing them to build robust, global-scale applications with a tiny team. For enterprises, it offers a path to break down monolithic applications, accelerate digital transformation, and significantly reduce cloud spend for workloads with variable or intermittent traffic. The future of application development is event-driven, granular, and abstracted from infrastructure concerns. Serverless computing is not just an option on the table; it is the architectural foundation for the next generation of cloud-native innovation. The revolution is here, and it is changing everything.