Ultimate Low Latency Hosting Solutions Guide

A. The Critical Imperative of Low Latency in the Modern Digital Landscape

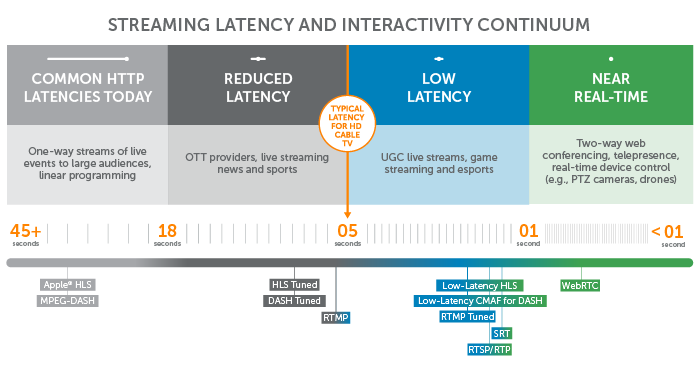

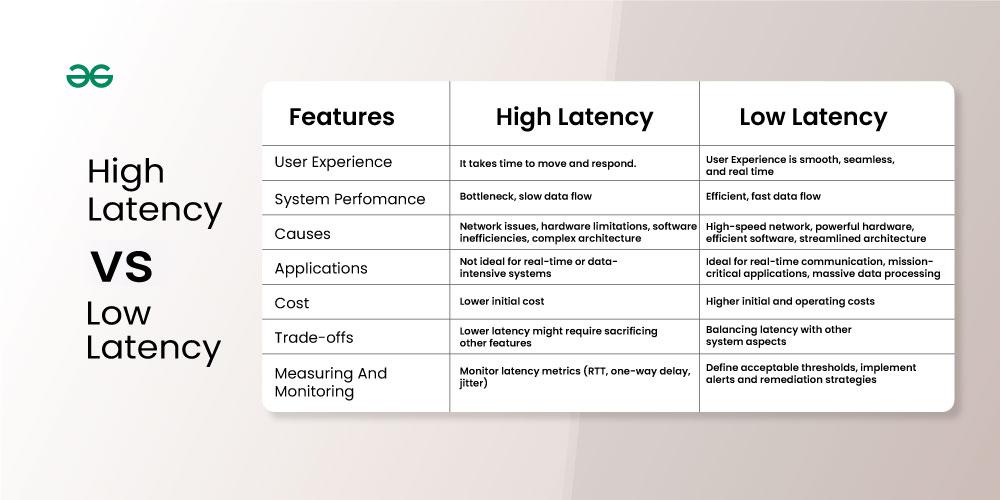

In the hyper-competitive digital arena, where user attention is the most valuable currency, speed is no longer a luxury; it is a fundamental requirement for survival and success. The concept of “low latency hosting” has moved from a technical consideration for a niche few to a core business strategy for any serious online entity. But what exactly is latency? In simple terms, latency is the delay, measured in milliseconds (ms), between a user’s action (like clicking a link) and the web server’s response to that action. It is the digital equivalent of reaction time. Low latency hosting, therefore, is the practice of architecting your web infrastructure to keep this delay as short as possible, creating a seamless, near-instantaneous, and engaging experience for your audience, regardless of their geographic location.

The impact of latency is profound and multifaceted. A delay of just a few hundred milliseconds can directly translate into increased bounce rates, reduced conversion rates, lower search engine rankings, and ultimately, lost revenue. This comprehensive guide will serve as your definitive resource for understanding, implementing, and benefiting from low latency hosting solutions. We will explore the technical causes of delay and the arsenal of solutions available, from Content Delivery Networks (CDNs) to edge computing, and provide a strategic blueprint for making your website blazingly fast on a global scale.

B. Deconstructing Latency: The Hidden Delays in Data Transmission

To effectively combat latency, one must first understand its root causes. Latency is not a single problem but the sum of several smaller delays that occur as data travels across the internet.

A. Propagation Delay: The Laws of Physics

This is the time it takes for a data packet to travel from the source (your server) to the destination (the user’s device). In fiber optic cable, light travels at roughly two-thirds of its speed in a vacuum, about 200,000 km per second, and this sets a hard physical floor on latency that no amount of server tuning can remove. While that is fast, distances matter: a user in Sydney accessing a server in London will inherently experience a much higher propagation delay than a user in Paris accessing the same server.
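To put numbers on this, the sketch below estimates the best-case one-way propagation delay from the great-circle distance between two cities. It assumes a signal speed in fiber of about 200,000 km/s and a perfectly straight cable path; real cable routes are longer, so actual delays are higher. The city coordinates are approximate values chosen for illustration.

```python
import math

# Assumed signal speed in optical fiber: roughly 2/3 of c, about 200,000 km/s.
FIBER_KM_PER_MS = 200.0
EARTH_RADIUS_KM = 6371.0

def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def min_propagation_ms(km):
    """Lower bound on one-way delay: straight-line distance at fiber speed."""
    return km / FIBER_KM_PER_MS

# Approximate city coordinates (illustrative assumption).
LONDON = (51.5074, -0.1278)
SYDNEY = (-33.8688, 151.2093)
PARIS = (48.8566, 2.3522)

for name, city in [("Sydney", SYDNEY), ("Paris", PARIS)]:
    km = great_circle_km(*city, *LONDON)
    one_way = min_propagation_ms(km)
    print(f"{name} -> London: {km:,.0f} km, >= {one_way:.1f} ms one way, "
          f">= {2 * one_way:.1f} ms round trip")
```

Even under these ideal assumptions, the Sydney user pays roughly 85 ms each way, while the Paris user pays under 2 ms.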

B. Transmission Delay: The Bandwidth Bottleneck

This is the time it takes to push all the data packets of a file onto the network link. It is directly influenced by the bandwidth of the connection. A larger file (like a high-resolution image or video) will take longer to transmit over a given bandwidth than a small text file.
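Transmission delay follows directly from size and bandwidth: delay in seconds = size in bits / bandwidth in bits per second. The sketch below applies that formula to two illustrative file sizes on an assumed 10 Mbps link. It ignores protocol overhead and TCP slow start, so real transfers take somewhat longer.

```python
def transmission_delay_ms(size_bytes, bandwidth_bps):
    """Time to push size_bytes onto a link of bandwidth_bps (bits per second)."""
    return size_bytes * 8 / bandwidth_bps * 1000

LINK_BPS = 10_000_000  # assumed 10 Mbps link

# Illustrative sizes: a 2 MB high-resolution image and a 20 KB HTML page.
for label, size in [("2 MB image", 2_000_000), ("20 KB page", 20_000)]:
    print(f"{label}: {transmission_delay_ms(size, LINK_BPS):.0f} ms")
```

On this link the image needs about 1,600 ms of transmission time versus about 16 ms for the page, which is why shrinking large assets matters as much as moving them closer to users.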

C. Processing Delay: The Server’s Workload

This is the time the web server takes to process the request and generate a response. Factors that affect processing delay include server CPU power, RAM availability, the efficiency of the application code (e.g., PHP, Python, Node.js), and database query speed. An overloaded or poorly optimized server will have high processing delays.
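One way to see how much of the response time is spent on the server is to measure it and report it in a `Server-Timing` response header, which browser developer tools can display. The decorator below is a minimal, framework-agnostic sketch: the handler `render_product_page` and its simulated 50 ms of work are hypothetical, and attaching the returned value to a real HTTP response is left to whatever framework you use.

```python
import functools
import time

def with_server_timing(metric="app"):
    """Wrap a handler so it also returns a Server-Timing header value."""
    def decorator(handler):
        @functools.wraps(handler)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            body = handler(*args, **kwargs)
            elapsed_ms = (time.perf_counter() - start) * 1000
            # Header value format: <metric>;dur=<milliseconds>
            return body, f"{metric};dur={elapsed_ms:.1f}"
        return wrapper
    return decorator

@with_server_timing("app")
def render_product_page(product_id):
    # Hypothetical handler: stands in for template rendering plus database queries.
    time.sleep(0.05)
    return f"<h1>Product {product_id}</h1>"

body, server_timing = render_product_page(42)
print(server_timing)  # app;dur=<elapsed ms>, varies per run
```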

D. Routing and Network Congestion: The Internet’s Traffic Jams

As data packets traverse the internet, they pass through multiple routers and switches. Each “hop” adds a tiny amount of delay. Furthermore, if a particular network path is congested with heavy traffic, packets may be queued, leading to significant jitter (inconsistent latency) and increased overall delay.
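Jitter is often summarized as the average change in latency between consecutive probes. The sketch below uses that simple definition, the mean absolute difference of successive round-trip times (RFC 3550 specifies a smoothed variant for RTP streams). The RTT samples are made up to show two paths with similar averages but very different consistency.

```python
import statistics

def jitter_ms(rtts):
    """Mean absolute difference between consecutive round-trip times."""
    if len(rtts) < 2:
        return 0.0
    diffs = [abs(b - a) for a, b in zip(rtts, rtts[1:])]
    return statistics.mean(diffs)

# Made-up RTT samples (ms) for two paths with similar averages.
stable_path = [40, 41, 40, 42, 41, 40]
congested_path = [30, 55, 28, 60, 35, 38]

for name, samples in [("stable", stable_path), ("congested", congested_path)]:
    print(f"{name}: mean {statistics.mean(samples):.1f} ms, jitter {jitter_ms(samples):.1f} ms")
```

Both paths average about 41 ms, but the congested one swings by more than 20 ms between probes, which users experience as stutter rather than uniform slowness.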

C. The Arsenal of Low Latency Hosting Solutions

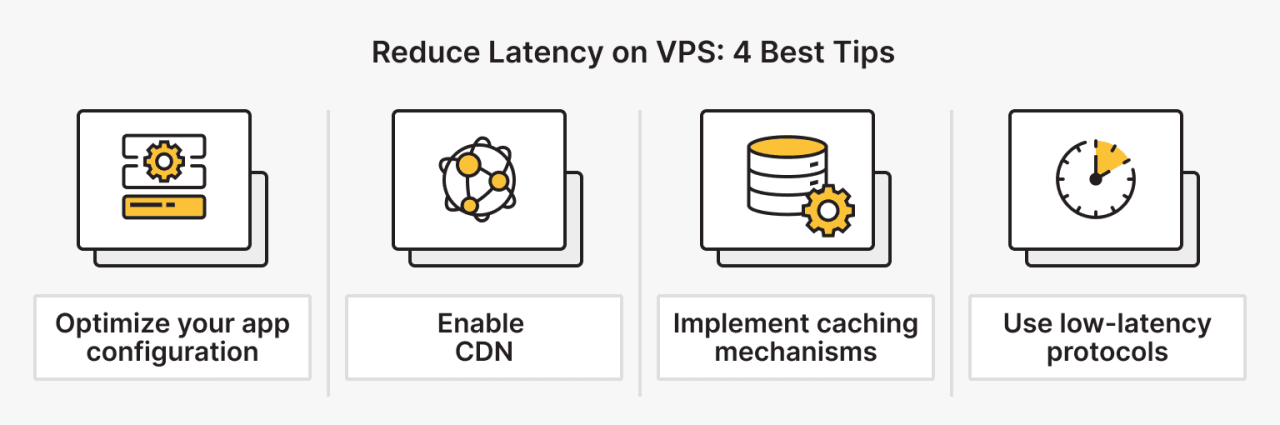

Achieving low latency requires a multi-pronged approach that addresses each of the delay types mentioned above. Here are the most effective solutions.

A. Content Delivery Networks (CDNs): The First Line of Defense

A CDN is a globally distributed network of proxy servers deployed in multiple data centers. Its primary purpose is to serve content to users from a geographically proximate server, thereby dramatically reducing propagation delay.

- How it Works: Instead of all user requests going to your single origin server, they are intelligently routed to the nearest CDN “edge” server. The CDN caches static content like images, CSS, JavaScript, and videos on these edge servers.

- Impact on Latency: When a user requests a cached file, it is delivered from an edge server that might be only a few dozen miles away, rather than a continent away. This can reduce load times for static assets from hundreds of milliseconds to tens of milliseconds.

- Key Providers: Cloudflare, Akamai, Amazon CloudFront, and Fastly are industry leaders, offering vast global networks.
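The edge behaviour described above can be modelled as a cache with a time-to-live (TTL): serve a fresh cached copy if one exists, otherwise fetch from the origin and store the result. This is a simplified sketch, not any provider's implementation; `fetch_from_origin` is a hypothetical stand-in, and the injectable clock exists only to make the example deterministic.

```python
import time

class EdgeCache:
    """Simplified TTL cache modelling a CDN edge server in front of an origin."""

    def __init__(self, fetch_from_origin, ttl_seconds=300, clock=time.monotonic):
        self.fetch_from_origin = fetch_from_origin
        self.ttl = ttl_seconds
        self.clock = clock
        self.store = {}  # path -> (expires_at, body)
        self.hits = 0
        self.misses = 0

    def get(self, path):
        now = self.clock()
        entry = self.store.get(path)
        if entry is not None and entry[0] > now:
            self.hits += 1  # fresh copy: served locally, no trip to the origin
            return entry[1]
        self.misses += 1  # missing or expired: go back to the origin
        body = self.fetch_from_origin(path)
        self.store[path] = (now + self.ttl, body)
        return body

# Hypothetical origin that records how often it is contacted.
origin_calls = []
def fetch_from_origin(path):
    origin_calls.append(path)
    return f"contents of {path}"

fake_now = [0.0]
cache = EdgeCache(fetch_from_origin, ttl_seconds=60, clock=lambda: fake_now[0])
cache.get("/logo.png")  # miss: fetched from origin
cache.get("/logo.png")  # hit: served from the edge
fake_now[0] = 61.0
cache.get("/logo.png")  # expired: fetched from origin again
print(cache.hits, cache.misses, len(origin_calls))  # 1 2 2
```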

B. Strategic Global Server Placement

The physical location of your primary web server (origin server) remains critically important. If your entire user base is concentrated in Western Europe, hosting your server in a data center in Frankfurt or Amsterdam is far superior to hosting it in California.

- Strategy: Use analytics tools to identify the geographic distribution of your audience. Choose a hosting provider that offers data center locations in or near your core markets. For a globally dispersed audience, a multi-region hosting strategy, potentially combined with a CDN, becomes essential.
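One way to turn audience analytics into a placement decision is to weight each candidate data center's latency by the share of users in each region and pick the lowest weighted average. All traffic shares, region names, and RTT figures below are illustrative assumptions, not measurements; in practice the RTTs would come from your own probes or your provider's published latency data.

```python
# Illustrative share of users per region (assumed values, e.g. from analytics).
traffic_share = {"western_europe": 0.70, "north_america": 0.20, "asia_pacific": 0.10}

# Illustrative median RTT (ms) from each user region to each candidate data center.
rtt_ms = {
    "frankfurt": {"western_europe": 15, "north_america": 95, "asia_pacific": 230},
    "virginia": {"western_europe": 85, "north_america": 30, "asia_pacific": 210},
    "california": {"western_europe": 150, "north_america": 60, "asia_pacific": 150},
}

def weighted_rtt(dc):
    """Average RTT experienced by the audience if everyone is served from dc."""
    return sum(share * rtt_ms[dc][region] for region, share in traffic_share.items())

ranking = sorted(rtt_ms, key=weighted_rtt)
for dc in ranking:
    print(f"{dc}: {weighted_rtt(dc):.1f} ms")
print("best single region:", ranking[0])
```

With these made-up numbers Frankfurt wins at a 52.5 ms weighted average, yet Asia-Pacific users still see 230 ms; that residual gap is what a second region or a CDN is meant to close.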

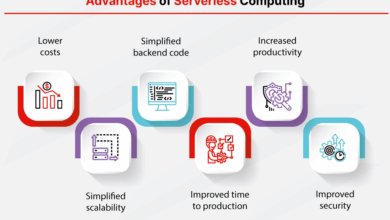

C. Edge Computing: The Next Evolution

While CDNs cache static content, edge computing takes it a step further by executing application logic at the edge of the network. This means parts of your dynamic website (like personalization, API calls, or A/B testing logic) can be processed on an edge server close to the user.

- Benefit: This drastically reduces the round-trip time for dynamic requests that would otherwise have to travel all the way back to the origin server. For interactive web applications, real-time gaming, and IoT devices, edge computing is a game-changer for latency reduction.

- Examples: Cloudflare Workers, AWS Lambda@Edge, and Vercel’s Edge Functions are prominent platforms enabling this technology.
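A/B testing is a good example of logic that can move to the edge: if variant assignment is a deterministic function of a user identifier (for example, one read from a cookie), any edge location can compute it locally without asking the origin. The function below illustrates the idea in Python for readability; each edge platform has its own supported runtimes, and the experiment name and 50/50 split are assumptions.

```python
import hashlib

def ab_variant(user_id, experiment="checkout_button", split=0.5):
    """Deterministically assign a user to variant "A" or "B".

    The same (experiment, user_id) always hashes to the same bucket, so every
    edge location reaches the same answer without contacting the origin.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    # Map the first 4 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return "A" if bucket < split else "B"

for uid in ["user-1001", "user-1002", "user-1003"]:
    print(uid, ab_variant(uid))
```

Including the experiment name in the hash keeps assignments independent across experiments, so a user in variant A of one test is not automatically in variant A of every other test.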

D. High-Performance Web Hosting Infrastructure

The type of hosting you choose has a monumental impact on processing delay.

- Bare Metal Servers vs. Virtual Servers: For the most latency-sensitive applications, bare metal servers can offer superior performance by eliminating the “virtualization overhead” of a hypervisor. However, modern virtual servers (like AWS’s C5 instances or Google’s C2 instances) are highly optimized and often provide an excellent balance of performance and flexibility.

- Solid-State Drives (SSDs): Ensure your hosting provider uses SSDs, ideally NVMe SSDs, for all storage. The random I/O (Input/Output) latency of SSDs is orders of magnitude lower than that of traditional hard disk drives (HDDs), leading to faster database queries and file access times.

- Optimized Web Servers and Software: Using high-performance web server software like Nginx or OpenLiteSpeed instead of the more traditional Apache can reduce processing overhead. Similarly, using the latest versions of PHP with OPcache enabled, or a leaner runtime like Node.js, can shave precious milliseconds off the server response time.

E. Advanced Network and Protocol Optimizations

The underlying network protocols can also be optimized for speed.

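- HTTP/2 and HTTP/3: Upgrade from the aging HTTP/1.1 protocol. HTTP/2 allows multiplexing (sending multiple files concurrently over a single connection), reducing the number of connections and round trips needed. HTTP/2 also defined server push, but major browsers, including Chrome, have since removed support for it, so it should not be relied on. HTTP/3, which runs over the QUIC transport protocol on top of UDP, combines the transport and encryption handshakes to save round trips and avoids head-of-line blocking between streams, which especially helps on unstable mobile networks.

- Anycast Networking: This is a routing technique where the same IP address is announced from multiple locations around the world. User requests are automatically routed to the topologically nearest data center, as determined by internet routing, which usually but not always matches geographic proximity. This is commonly used for DNS (e.g., Cloudflare’s 1.1.1.1) and can significantly reduce the initial DNS lookup time.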
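The round-trip savings from newer protocols can be estimated by counting the handshake round trips that happen before the first request can be sent: TCP takes one, a full TLS 1.2 handshake adds two, TLS 1.3 adds one, and QUIC (used by HTTP/3) folds the transport and TLS 1.3 handshakes into a single round trip. The sketch below ignores DNS lookup, server processing, transmission time, and session resumption (which can cut these counts further, down to 0-RTT), and the 100 ms RTT is an assumed value.

```python
# Round trips spent on connection setup before the first HTTP request (full handshakes).
SETUP_ROUND_TRIPS = {
    "TCP + TLS 1.2 (HTTP/1.1 or HTTP/2)": 1 + 2,
    "TCP + TLS 1.3 (HTTP/1.1 or HTTP/2)": 1 + 1,
    "QUIC with TLS 1.3 (HTTP/3)": 1,
}

def first_byte_ms(setup_round_trips, rtt_ms):
    """Setup round trips plus one round trip for the request and response."""
    return (setup_round_trips + 1) * rtt_ms

RTT_MS = 100  # assumed round-trip time, e.g. a distant user with no nearby edge

for stack, trips in SETUP_ROUND_TRIPS.items():
    print(f"{stack}: ~{first_byte_ms(trips, RTT_MS)} ms to first byte")
```

The saving scales with RTT, which is why HTTP/3 helps most for distant or mobile users and why it pairs well with CDNs and anycast, which shrink the RTT itself.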

D. The Tangible Business Benefits of Investing in Low Latency

The investment in a low-latency infrastructure is justified by a compelling return on investment across several key business metrics.

A. Enhanced User Experience and Engagement

A fast website is a pleasure to use. Users are more likely to stay longer, explore more pages, and interact with your content. A slow, laggy website creates frustration and signals a lack of professionalism.

B. Superior Search Engine Optimization (SEO) Rankings

Since 2010, Google has used page speed as a ranking factor for desktop searches, and since the “Speed Update” in 2018, for mobile searches as well. Core Web Vitals, a set of metrics consisting of Largest Contentful Paint (LCP), Interaction to Next Paint (INP, which replaced First Input Delay (FID) in March 2024), and Cumulative Layout Shift (CLS), are part of the page experience signals used by Google’s ranking systems. A low-latency infrastructure is fundamental to achieving good scores on these metrics, particularly LCP.
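Google publishes "good" and "poor" thresholds for each Core Web Vital and assesses them at the 75th percentile of page loads. The sketch below encodes those thresholds (LCP in seconds, INP in milliseconds, CLS unitless) and rates a set of field samples. The sample values are invented, and the thresholds should be checked against Google's current documentation before relying on them.

```python
import math

# (good_max, needs_improvement_max) per metric, per Google's published thresholds.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}

def p75(samples):
    """75th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]

def rate(metric, samples):
    """Return (p75 value, verdict) for one metric."""
    good_max, ni_max = THRESHOLDS[metric]
    value = p75(samples)
    if value <= good_max:
        return value, "good"
    if value <= ni_max:
        return value, "needs improvement"
    return value, "poor"

# Invented field samples for one page.
field_data = {
    "LCP": [1.8, 2.1, 2.4, 3.1, 2.0, 2.2, 4.5, 1.9],
    "INP": [80, 220, 150, 240, 90, 260, 600, 130],
    "CLS": [0.02, 0.3, 0.0, 0.28, 0.03, 0.26, 0.5, 0.04],
}
for metric, samples in field_data.items():
    value, verdict = rate(metric, samples)
    print(f"{metric}: p75 = {value} -> {verdict}")
```

Because the assessment uses the 75th percentile, a fast median is not enough: the slowest quarter of visits, often distant or mobile users, decides the verdict.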

C. Directly Improved Conversion Rates and Revenue

The correlation between speed and conversions is well-documented. Studies by industry giants like Amazon and Google have shown that delays of even 100 milliseconds can have a measurable negative impact on conversion rates. For an e-commerce site, this translates directly to lost sales. A faster site builds trust and removes friction from the purchasing process.

D. Competitive Advantage in the Market

In a world where many businesses still neglect performance, having a noticeably faster website can be a powerful differentiator. It becomes a unique selling proposition (USP) that can attract and retain customers who value a smooth and efficient online experience.

E. A Step-by-Step Action Plan for Achieving Low Latency

Transforming your website into a low-latency powerhouse requires a systematic approach.

A. Step 1: Comprehensive Performance Auditing

You cannot improve what you cannot measure.

- Tools: Use tools like GTmetrix, Pingdom, and Google PageSpeed Insights to get a baseline performance score.

- Core Web Vitals: Use Google Search Console to see your field data for Core Web Vitals.

- Traceroute: Use the traceroute (or mtr) command-line tools to see the network path to your server and identify problematic hops.
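Alongside those tools, a small script can record a repeatable baseline of server response times. The sketch below times `urllib.request.urlopen`, which returns once the status line and headers have arrived, so each sample roughly covers DNS lookup, connection setup, and server processing but not the full body download. The URL in the commented usage line is a placeholder, and measurements from a single machine say nothing about users elsewhere.

```python
import statistics
import time
import urllib.request

def fetch_headers(url):
    """Open url and return the status once the status line and headers are received."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.status

def measure(url, samples=10, fetch=fetch_headers):
    """Time repeated requests and return (median_ms, worst_ms)."""
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        fetch(url)
        timings.append((time.perf_counter() - start) * 1000)
    return statistics.median(timings), max(timings)

# Usage (placeholder URL; replace with a page on your own site):
# median_ms, worst_ms = measure("https://example.com/")
# print(f"median {median_ms:.0f} ms, worst {worst_ms:.0f} ms")
```

The `fetch` parameter is injectable so the timing logic can be tested offline or swapped for a client that also measures full download time.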

B. Step 2: Implement a Robust CDN

This is the single most impactful step for a globally distributed audience. Sign up with a reputable CDN provider, integrate it with your website, and configure it to cache all static assets. Most CDNs offer easy-to-follow setup guides.
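What a CDN caches, and for how long, is usually driven by the Cache-Control headers your origin sends. The policy below is one common pattern, sketched under assumptions: static files whose names embed a content hash (such as `app.3f9c2b.js`, a hypothetical build-tool naming scheme) are cached for a year and marked `immutable`, other static files get a one-day lifetime, and HTML is sent with `no-cache` so caches must revalidate it with the origin before reuse. How each CDN treats these directives varies, so check your provider's documentation.

```python
import re

# Hypothetical convention: build tools embed a hex content hash, e.g. app.3f9c2b.js
HASHED_ASSET = re.compile(r"\.[0-9a-f]{6,}\.(?:js|css|png|jpg|webp|avif|woff2)$")
STATIC_EXTENSIONS = (".js", ".css", ".png", ".jpg", ".webp", ".avif", ".svg", ".woff2")

def cache_control_for(path):
    """Return a Cache-Control header value for a request path."""
    if HASHED_ASSET.search(path):
        # Content never changes under this name, so edges and browsers can keep it.
        return "public, max-age=31536000, immutable"
    if path.endswith(STATIC_EXTENSIONS):
        # Unversioned static file: cache for one day.
        return "public, max-age=86400"
    # HTML and other dynamic responses: revalidate with the origin before reuse.
    return "no-cache"

for p in ["/static/app.3f9c2b.js", "/images/hero.webp", "/products/42"]:
    print(p, "->", cache_control_for(p))
```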

C. Step 3: Optimize Your Hosting Foundation

- Evaluate Your Provider: Is your current hosting plan sufficient? Consider upgrading to a provider with a global presence, SSD storage, and modern CPU architectures.

- Server Stack Tuning: Work with your sysadmin or developer to optimize your web server (Nginx/Apache) configuration, enable caching (like Varnish or Redis), and ensure database indexes are properly set up.
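The effect of a missing index is easy to see in a query plan. This sketch uses Python's built-in `sqlite3` module with a hypothetical `orders` table: before the index exists, SQLite reports a full table scan; afterwards it searches the index. The exact plan text differs slightly between SQLite versions, and other databases have their own `EXPLAIN` output, but the principle carries over.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, i * 1.5) for i in range(10_000)],
)

QUERY = "SELECT * FROM orders WHERE customer_id = ?"

def plan(sql):
    """Return SQLite's query plan text (the last column of each EXPLAIN row)."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (42,)).fetchall()
    return " | ".join(str(row[-1]) for row in rows)

before = plan(QUERY)  # full scan over every row
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY)   # lookup through the new index

print("before:", before)
print("after: ", after)
```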

D. Step 4: Implement Advanced Optimizations

- Enable HTTP/2 and HTTP/3: Contact your hosting provider to ensure HTTP/2 is enabled. For HTTP/3, you may need to use a provider like Cloudflare that offers broad support.

- Image and Code Optimization: Compress images and serve them in modern formats like WebP. Minify your CSS and JavaScript files to reduce their transmission size.
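To see how much minification and compression shrink a text asset, the sketch below applies a deliberately naive CSS minifier and then gzip, which most servers and CDNs apply to text responses. The minifier only strips comments and surplus whitespace with regular expressions; it would mangle cases such as whitespace inside strings or descendant selectors like `a :hover`, so a real build tool should be used in production. The stylesheet is a made-up sample.

```python
import gzip
import re

def naive_minify_css(css):
    """Strip comments and collapse whitespace. Illustrative only, not production-safe."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove /* comments */
    css = re.sub(r"\s+", " ", css)                        # collapse runs of whitespace
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)          # drop spaces around punctuation
    return css.strip()

# Made-up stylesheet, repeated to resemble a larger file.
sample = """
/* Card component */
.card {
    margin : 0 auto ;
    padding : 16px ;
    color : #333 ;
}
""" * 50

minified = naive_minify_css(sample)
compressed = gzip.compress(minified.encode())
print(f"original {len(sample.encode())} B, minified {len(minified)} B, "
      f"minified+gzip {len(compressed)} B")
```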

E. Step 5: Continuous Monitoring and Iteration

Performance optimization is not a one-time task. Continuously monitor your website’s speed using the tools mentioned. Set up automated alerts for performance regressions and be prepared to iterate on your configuration as your traffic and content evolve.
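An automated regression check can be as simple as comparing recent samples against a stored baseline and alerting when the median grows by more than a set tolerance. The 10% tolerance, the 180 ms baseline, and the sample runs below are arbitrary assumptions, and how the alert is delivered (email, chat, pager) is left out.

```python
import statistics

def check_regression(baseline_ms, recent_ms, tolerance=0.10):
    """Return (regressed, current_median) comparing recent samples with a baseline."""
    current = statistics.median(recent_ms)
    return current > baseline_ms * (1 + tolerance), current

BASELINE_MS = 180  # assumed median response time recorded after the last tuning pass

# Made-up samples from two monitoring runs.
for run in ([175, 182, 178, 190, 185], [210, 205, 230, 198, 215]):
    regressed, current = check_regression(BASELINE_MS, run)
    status = "ALERT: regression" if regressed else "ok"
    print(f"median {current} ms vs baseline {BASELINE_MS} ms -> {status}")
```

Using the median of several samples rather than a single request keeps one slow outlier from triggering a false alarm.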

F. Future-Proofing: The Horizon of Low-Latency Technologies

The pursuit of lower latency is relentless. Several emerging technologies promise to push the boundaries even further.

A. 5G Networks

The rollout of 5G cellular technology promises not just faster download speeds but significantly lower latency for mobile users, with the standard targeting radio-link latency as low as 1ms in ideal conditions (end-to-end latency to a distant server will still be higher). This will enable a new generation of real-time mobile applications.

B. WebAssembly (Wasm)

WebAssembly allows code written in languages like C++, Rust, and Go to run in the browser at near-native speed. This can dramatically speed up computationally heavy parts of complex web applications running on the client, enabling faster interactivity.

C. AI-Powered Optimization

Artificial intelligence and machine learning are beginning to be used to dynamically optimize content delivery. AI can predict user behavior to pre-fetch and cache content before it’s even requested, making those requests feel nearly instantaneous.
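Production systems may use machine-learned models for this, but the core idea of predictive prefetching can be illustrated with a much simpler statistical stand-in: count which page users most often visit next from each page, and prefetch the top candidate. The navigation sessions below are invented, and this frequency-count approach is a substitute for an AI model, not an example of one.

```python
from collections import Counter, defaultdict

def build_next_page_model(sessions):
    """Count page -> next-page transitions across recorded navigation sessions."""
    transitions = defaultdict(Counter)
    for pages in sessions:
        for current, nxt in zip(pages, pages[1:]):
            transitions[current][nxt] += 1
    return transitions

def pages_to_prefetch(model, current_page, k=1):
    """Return the k most frequent next pages after current_page."""
    return [page for page, _ in model[current_page].most_common(k)]

# Invented navigation sessions.
sessions = [
    ["/", "/products", "/products/42", "/cart"],
    ["/", "/products", "/products/7"],
    ["/", "/about"],
    ["/", "/products", "/products/42"],
]
model = build_next_page_model(sessions)
print(pages_to_prefetch(model, "/"))          # ['/products']
print(pages_to_prefetch(model, "/products"))  # ['/products/42']
```

In a browser, the predicted URL could then be requested ahead of time, for example with a `<link rel="prefetch">` hint, so the page is already cached when the user clicks.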

G. Conclusion: Speed as a Strategic Business Imperative

Low latency hosting is far more than a technical checkbox; it is a comprehensive strategy that touches upon user psychology, search engine algorithms, and your company’s bottom line. In an era where digital expectations have never been higher, the speed of your website is a direct reflection of your brand’s commitment to quality and user satisfaction.

By understanding the sources of delay, leveraging the powerful combination of CDNs, strategic hosting, and edge computing, and following a disciplined action plan, you can transform your web property from a sluggish digital brochure into a dynamic, high-performance engine for growth. The investment you make in low latency today is an investment in customer satisfaction, competitive advantage, and long-term commercial success. The time to act is now, before your competitors do.